Efficient Reinforcement Learning Algorithm For Complex Environments (No. 0198)

Summary

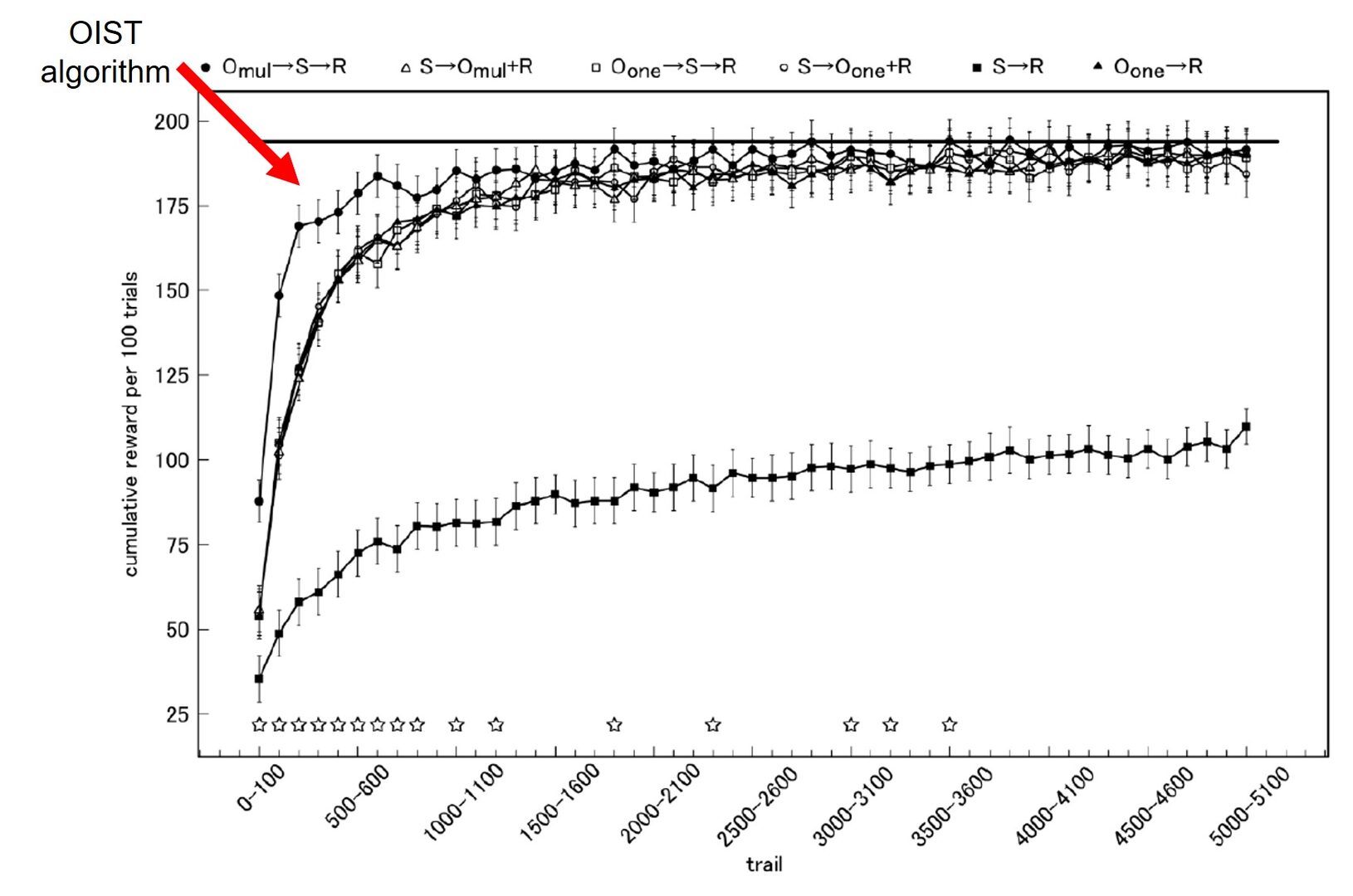

A computationally efficient reinforcement learning algorithm for complex environments is provided.

The machine learning market is worth billions of dollars and is expected to grow rapidly, at a Compound Annual Growth Rate (CAGR) of 44.1%. Organizations worldwide are widely adopting machine-learning-enabled solutions to enhance the customer experience, and in the coming years, applications of machine learning in industry verticals such as web services, robotics, and data analysis are expected to rise exponentially. However, conventional reinforcement learning methods are inefficient in complex environments that contain a large amount of task-irrelevant information. Here we present a promising reinforcement learning algorithm developed by a group of researchers including Prof. Tomoki Fukai. The algorithm provides computational principles for learning optimal behavior efficiently from a small number of trials, which yields a computationally efficient method with minimal memory requirements.

Applications

- Web services

- Robotics

- Enterprise data analysis

Advantages

- Computationally efficient

- Low memory load

Technology

The algorithm is based on three principles:

- Reward-based inference: instead of predicting the next state from the current state, the method uses the state as input to predict the reward.

- Efficient state mapping rule: the method assigns a state to a combination of multiple observations rather than to each single observation.

- Thompson sampling over uncertain posterior states: the Bellman equation, coupled with sampling of posterior states, enables the agent to choose adequate actions.
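To make these principles more concrete, here is a minimal Python sketch under stated assumptions; it is not the published algorithm. It assumes binary (0/1) rewards with a Beta posterior for each (state, action) pair, defines a state as the tuple of the last `k` observations, and chooses actions by Thompson sampling from the reward posterior. As a contextual-bandit simplification, it omits the Bellman backup over future rewards and any posterior over hidden states. The class `ThompsonAgent`, the function `run`, the parameter `k`, and the XOR toy task are all hypothetical.

```python
import random
from collections import defaultdict, deque


class ThompsonAgent:
    """Hypothetical sketch, not the published algorithm.

    - State mapping: a state is the tuple of the last `k` observations.
    - Reward-based inference: a Beta posterior over P(reward = 1 | state, action);
      no model of the next state is learned.
    - Action choice: Thompson sampling from that posterior (no Bellman backup).
    """

    def __init__(self, n_actions, k=2, seed=0):
        self.n_actions = n_actions
        self.history = deque(maxlen=k)  # most recent k observations
        # Beta(1, 1), i.e. a uniform prior, for every (state, action) pair
        self.alpha = defaultdict(lambda: 1.0)
        self.beta = defaultdict(lambda: 1.0)
        self.rng = random.Random(seed)

    def observe(self, obs):
        """Append an observation and return the current state key."""
        self.history.append(obs)
        return tuple(self.history)

    def act(self, state):
        """Sample a reward probability per action; pick the largest sample."""
        samples = [
            self.rng.betavariate(self.alpha[(state, a)], self.beta[(state, a)])
            for a in range(self.n_actions)
        ]
        return max(range(self.n_actions), key=samples.__getitem__)

    def update(self, state, action, reward):
        """Conjugate Beta update for a binary (0/1) reward."""
        if reward:
            self.alpha[(state, action)] += 1.0
        else:
            self.beta[(state, action)] += 1.0


def run(k=2, steps=5000, window=1000, seed=1):
    """Toy task: the rewarded action is (previous obs XOR current obs),
    so no single observation predicts the reward, but a pair does.
    Returns the fraction of rewarded actions over the last `window` steps."""
    env_rng = random.Random(seed)
    agent = ThompsonAgent(n_actions=2, k=k, seed=seed)
    prev = env_rng.randint(0, 1)
    agent.observe(prev)
    hits = 0
    for t in range(steps):
        obs = env_rng.randint(0, 1)
        state = agent.observe(obs)
        action = agent.act(state)
        reward = int(action == (prev ^ obs))
        agent.update(state, action, reward)
        if t >= steps - window:
            hits += reward
        prev = obs
    return hits / window


print(run(k=2), run(k=1))
```

In this toy task, no single observation predicts which action is rewarded, so with `k=1` the agent should stay near chance, while with `k=2` the state carries the needed combination of observations and the agent should approach perfect performance. This is a deliberately simple illustration of why mapping states to combinations of observations can matter.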

CONTACT FOR MORE INFORMATION

Technology Licensing Section

OIST Innovation

tls@oist.jp

+81(0)98-966-8937