Environmental Control based on Reinforcement Learning Algorithm (No. 0123, 0124)

Summary

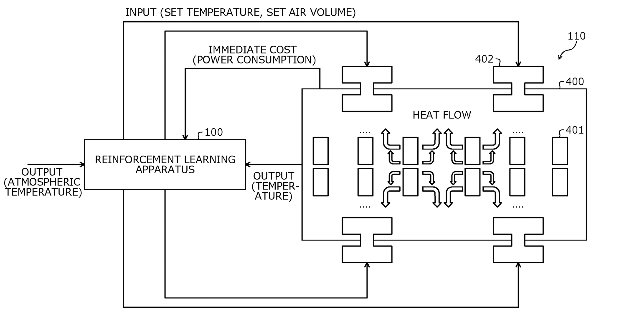

Machine learning-enabled solutions are being adopted by organizations worldwide to enhance customer experience and to gain a competitive edge in business operations. In conventional reinforcement learning, a control law that minimizes or maximizes a value function, which represents an accumulated cost or an accumulated reward, is learned from the immediate cost or immediate reward given for the controlled object (e.g. a temperature-controlled room). This control law then determines the input value for the controlled object. However, the state of the controlled object, and the immediate cost or immediate reward corresponding to an input to the controlled object, may be unknown. In that case, traditional techniques have difficulty determining the desired input value accurately. Furthermore, it may not be feasible to update the feedback coefficient matrix efficiently. Here, we present promising algorithms developed by a group of researchers led by Prof. Kenji Doya. The developed algorithms overcome the above problems and provide a more reliable control scheme.

Applications

- Partially observable control

- Linear quadratic regulation (LQR)

- Server room temperature controller

- Electric generator controller

Advantages

- No need to observe the state of the controlled object

- No need to know the immediate cost or reward corresponding to the input to the controlled object

Technology

The technology is based on novel reinforcement learning algorithms that explicitly define a control policy in a partially observable environment. The resulting control policy can be used to manage environmental conditions efficiently. Specifically, to create an effective policy, the method determines the coefficients of a value function (e.g. a power consumption model) from past and present inputs (e.g. temperature settings) and outputs (e.g. temperature sensor readings). It also provides a way to update the feedback coefficients so that the total cost (e.g. accumulated power consumption) is minimized in a timely manner.
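
The general idea of fitting value function coefficients from input and output data, and then updating a feedback coefficient from them, can be illustrated with a minimal sketch. This is not the licensed algorithm: it is a textbook-style least-squares policy iteration for a scalar linear quadratic problem, and it makes simplifying assumptions that the technology itself avoids, namely that a single current sensor reading is enough to act on and that the immediate cost is measured. The plant `room`, the cost `power_cost`, and all numeric values are hypothetical. In a partially observable setting, the feature vector would presumably be built from a window of past inputs and outputs rather than from the current reading alone.

```python
import random


def features(y, u):
    """Quadratic features, so that Q(y, u) = theta . features(y, u)."""
    return [y * y, y * u, u * u]


def solve_linear(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        tail = sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (m[i][n] - tail) / m[i][i]
    return x


def evaluate_policy(gain, plant, cost, steps=200, seed=0):
    """Fit value function coefficients for the policy u = -gain * y.

    Uses the relation Q(y, u) - Q(y_next, -gain * y_next) = cost(y, u)
    and solves the least-squares normal equations for theta.
    """
    rng = random.Random(seed)
    y = 1.0
    ata = [[0.0] * 3 for _ in range(3)]
    atc = [0.0] * 3
    for _ in range(steps):
        u = -gain * y + rng.gauss(0.0, 0.5)  # exploration noise on the input
        y_next = plant(y, u)
        u_next = -gain * y_next  # input the evaluated policy would choose next
        diff = [p - q for p, q in zip(features(y, u), features(y_next, u_next))]
        c = cost(y, u)
        for i in range(3):
            atc[i] += diff[i] * c
            for j in range(3):
                ata[i][j] += diff[i] * diff[j]
        y = y_next
    return solve_linear(ata, atc)


def learn_gain(plant, cost, gain=0.0, iterations=8):
    """Alternate coefficient fitting and feedback coefficient updates."""
    for i in range(iterations):
        theta = evaluate_policy(gain, plant, cost, seed=i)
        # Minimizing theta[0]*y^2 + theta[1]*y*u + theta[2]*u^2 over u
        # gives u = -(theta[1] / (2 * theta[2])) * y.
        gain = theta[1] / (2.0 * theta[2])
    return gain


def room(y, u):
    """Hypothetical plant: y is the temperature deviation from the setpoint."""
    return 0.9 * y + 0.5 * u


def power_cost(y, u):
    """Hypothetical immediate cost: comfort penalty plus actuation effort."""
    return y * y + 0.1 * u * u


learned_gain = learn_gain(room, power_cost)
print(f"learned feedback gain: {learned_gain:.4f}")
```

The learner only calls `room` and `power_cost` to obtain data; it never reads the plant coefficients directly. The starting gain of zero works here because the hypothetical plant is stable without control, which this kind of policy iteration needs for its first evaluation step.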

CONTACT FOR MORE INFORMATION

Graham Garner

Technology Licensing Section

tls@oist.jp

+81(0)98-966-8937