FY2011 Annual Report

Neural Computation Unit

Professor Kenji Doya

Abstract

The Neural Computation Unit pursues the dual goals of developing robust and flexible learning algorithms and elucidating the brain’s mechanisms for robust and flexible learning. Our specific focus is on how the brain realizes reinforcement learning, in which an agent, biological or artificial, learns novel behaviors in uncertain environments by exploration and reward feedback. We combine top-down, computational approaches and bottom-up, neurobiological approaches to achieve these goals. The major achievements of the three subgroups in fiscal year 2011 are the following.

a) The Dynamical Systems Group has developed a method for estimating synaptic connections in a local neural circuit from multi-electrode or optical imaging data using Bayesian inference with sparse hierarchical priors. The group started to develop machine learning methods for diagnosis of depression patients using functional MRI and other neurobiological data. The group also started to develop a large-scale spiking neural network model of the basal ganglia to understand the symptoms of Parkinson's disease.

b) The Systems Neurobiology Group revealed, through local drug injection and chemical measurement using microdialysis, that blockade of forebrain serotonin release impairs the ability of rats to wait for delayed rewards. In collaboration with Prof. Kuhn, the group started two-photon imaging of cortical neurons in mice in order to elucidate the neural substrates of mental simulation.

c) The Adaptive Systems Group developed a model-based policy gradient algorithm for reinforcement learning and evaluated its performance in a mating task of "Spring Dog" robots. The group analyzed the polymorphism that emerged in a colony of evolving robots and showed that the co-existence of multiple phenotypes is evolutionarily stable. A new robot platform utilizing the computing power and multiple sensors of an Android smartphone was developed by adding two motors with an Arduino board as the interface.

1. Staff

- Dynamical Systems Group

- Dr. Junichiro Yoshimoto, Group Leader

- Dr. Makoto Otsuka, Researcher

- Naoto Yoshida, Graduate Student

- Systems Neurobiology Group

- Dr. Makoto Ito, Group Leader

- Dr. Akihiro Funamizu, JSPS Research Fellow

- Masato Hoshino, Guest Researcher

- Dr. Katsuhiko Miyazaki, Researcher

- Dr. Kayoko Miyazaki, Researcher

- Dr. Yu Shimizu, Researcher

- Ryo Shiroishi, Graduate Student

- Alan Rodrigues, Graduate Student

- Adaptive Systems Group

- Dr. Eiji Uchibe, Group Leader

- Dr. Stefan Elfwing, Researcher

- Ken Kinjo, Graduate Student

- Chris Reinke, Graduate Student

- Phillip Thomas, Graduate Student

- Viktor Zhumatiy, Graduate Student

- Research Administrator / Secretary

- Emiko Asato

- Kikuko Matsuo

- Hitomi Shinzato

2. Collaborations

- Theme: Biological model study for learning strategy of behavioral acquisition in the brain

- Type of collaboration: Joint research

- Researchers:

- Masato Hoshino, Honda Research Institute Japan Co., Ltd

- Osamu Shouno, Honda Research Institute Japan Co., Ltd

- Yohane Takeuchi, Honda Research Institute Japan Co., Ltd

- Hiroshi Tsujino, Honda Research Institute Japan Co., Ltd

- Theme: Functional brain imaging study on the brain mechanisms of behavioral learning

- Type of collaboration: Joint research

- Researchers:

- Dr. Mitsuo Kawato, ATR Computational Neuroscience Laboratories

- Dr. Erhan Oztop, ATR Computational Neuroscience Laboratories

- Theme: Study on neural mechanisms of decision making

- Type of collaboration: Joint research

- Researchers:

- Professor Masamichi Sakagami, Tamagawa University

- Dr. Alan Farmin, Rodrigues, Tamagawa University

3. Activities and Findings

3.1 Dynamical Systems Group

Sparse Bayesian identification of synaptic connectivity from multi-neuronal spike train data [Yoshimoto]

Recent progress in neuronal recording techniques allows us to record spike events simultaneously from a majority of neurons in a local circuit. Such rich datasets would allow us not only to assess the information encoded by neurons, but also to uncover the structure of the underlying neural circuit, which is an essential step toward understanding neural computation at the network level. For this purpose, we have been developing an algorithm to identify synaptic connections from multi-neuronal spike train data based on a generalized leaky integrate-and-fire neuron model and sparse Bayesian inference. In this fiscal year, we strengthened the theoretical validity of the model and developed a variation of generalized linear models with alpha- and biexponential-function kernels for our purpose. The results were presented at two conferences (Yoshimoto & Doya, Neuroinformatics2011; Yoshimoto & Doya, BSJ2011).
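As a rough illustration of the kernels mentioned above, the following sketch defines an alpha function and a biexponential function in their common textbook forms and uses one of them to filter a presynaptic spike train into a synaptic drive. The report does not give the exact parameterization used by the group, so the function names, time constants, and the weight `w` are illustrative assumptions only.

```python
import numpy as np

def alpha_kernel(t, tau):
    """Alpha function (t/tau)*exp(1 - t/tau) for t >= 0, zero otherwise; peaks at 1 when t == tau."""
    tp = np.maximum(np.asarray(t, dtype=float), 0.0)
    return (tp / tau) * np.exp(1.0 - tp / tau)

def biexp_kernel(t, tau_rise, tau_decay):
    """Difference of exponentials for t >= 0, zero otherwise (assumes tau_decay > tau_rise)."""
    tp = np.maximum(np.asarray(t, dtype=float), 0.0)
    return np.exp(-tp / tau_decay) - np.exp(-tp / tau_rise)

# Hypothetical example: 1 ms bins, a presynaptic neuron spiking at 10 ms and 40 ms.
dt = 1.0                                  # bin width in ms (assumed)
t = np.arange(0.0, 100.0, dt)             # kernel support in ms
spikes = np.zeros(200)
spikes[[10, 40]] = 1.0

kernel = alpha_kernel(t, tau=5.0)         # tau = 5 ms is an arbitrary choice
w = 0.8                                   # hypothetical connection weight
# Causal convolution: the drive at time n depends only on spikes at or before n.
drive = w * np.convolve(spikes, kernel)[: len(spikes)]
```

In a generalized linear model of this kind, a drive like `drive` would enter the postsynaptic neuron's firing intensity, and a sparse prior on the weights would push most candidate connections toward zero.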

Developing a diagnosis and prognosis system for depressive disorder based on machine learning [Yoshimoto, Shimizu]

Correlations between specific gene expression and the volume of several brain areas suggest that genetic polymorphism could also be at the root of clinical depression. However, genetic expression and the pathophysiology of the disease are not directly connected. To elucidate this relation, an integrative approach is necessary that can take the multitude of symptoms of depression into account. In our study, we apply machine learning algorithms to multidimensional data obtained from depression patients, including structural and functional MRI data, genetic polymorphism, and behavioral tests, in order to identify subtypes of the disease. We investigate how they can be categorized so as to provide a basis for the development of standard diagnosis and treatment methods for depression.

In our preliminary investigations, we were able to classify fMRI data of depressed and healthy subjects with 82.6% accuracy using a binary classification algorithm referred to as LASSO (least absolute shrinkage and selection operator). Figure 1 shows the locations in the brain of the weights that were found to contribute to the identification of depressed subjects (positive weights) and of those that were found to contribute to the identification of healthy subjects (negative weights). These results form the basis for adding further biomedical parameters, such as brain volume and serotonin level, to our classification.

Figure 1: (Upper row) Locations that positively contribute to the identification of depressed subjects. (Bottom row) Locations that are typically activated in healthy subjects.
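The idea of using LASSO for binary classification with sparse, interpretable weights can be sketched as follows. This is a minimal illustration on synthetic data, not the group's pipeline: it assumes the labels are coded as +1 and -1, fits an L1-penalized least-squares regression by proximal gradient descent (ISTA), and classifies by the sign of the prediction. The data sizes, the penalty `lam`, and all function names are assumptions made for the example.

```python
import numpy as np

def soft_threshold(v, t):
    """Proximal operator of the L1 norm: shrink each entry toward zero by t."""
    return np.sign(v) * np.maximum(np.abs(v) - t, 0.0)

def lasso_ista(X, y, lam, n_iter=500):
    """Minimize (1/2n)*||y - Xw||^2 + lam*||w||_1 by proximal gradient descent."""
    n, d = X.shape
    step = n / (np.linalg.norm(X, 2) ** 2)   # 1 / Lipschitz constant of the smooth part
    w = np.zeros(d)
    for _ in range(n_iter):
        grad = X.T @ (X @ w - y) / n
        w = soft_threshold(w - step * grad, step * lam)
    return w

# Synthetic stand-in for "subjects x features" data (not fMRI data).
rng = np.random.default_rng(0)
n, d = 80, 200
X = rng.standard_normal((n, d))
true_w = np.zeros(d)
true_w[:5] = [2.0, -2.0, 1.5, -1.5, 1.0]     # only five informative features
y = np.sign(X @ true_w + 0.1 * rng.standard_normal(n))  # labels in {+1, -1}

w = lasso_ista(X, y, lam=0.1)
pred = np.sign(X @ w)
train_acc = np.mean(pred == y)               # training accuracy only, not cross-validated
n_selected = np.count_nonzero(w)             # features with nonzero weight
```

Positive and negative entries of `w` play the role of the positive and negative weights described for Figure 1. A realistic evaluation would use held-out subjects rather than training accuracy.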

Large-scale modeling of basal ganglia circuit [Otsuka, Yoshimoto]

The basal ganglia play an important role in motor control and learning, and their dysfunction is associated with movement disorders. The most notable is Parkinson's disease, which involves degeneration of the dopaminergic system in the substantia nigra pars compacta (SNc), but little is understood about how the degeneration changes the dynamics of the basal ganglia circuit, leading to resting tremor and muscle rigidity. To reveal the mechanism at the system level, we started a new project for constructing a realistic model of the basal ganglia circuit.

In this fiscal year, we surveyed available models of the basal ganglia and selected the model proposed by Shouno et al. (2009) as the starting point for constructing a more sophisticated model, owing to its biological plausibility and its functional capability of action selection. To ensure efficient and consistent simulation in parallel computing, we began by porting the original simulation code to the newest version of the NEST simulator and confirmed that it reproduces the results reported in Shouno et al. (2009) (Fig. 2, Left). Analysis of the results revealed beta-band oscillation in GPe, STN, and GPi even in the absence of external stimulation (Fig. 2, Right), which differs from what is observed in animal experiments (Fig. 2, Center). We have been investigating this discrepancy between real and simulated experiments.

Figure 2: (Left) A profile of neural firing in the basal ganglia circuit simulated by the model of Shouno et al. (Center) Firing patterns of neurons in GPi, GPe, and STN measured by simultaneous recording of real neurons in monkey (cited from Tachibana et al. 2011). (Right) A typical firing pattern simulated by the current model.

3.2 Systems Neurobiology Group

Role of serotonin in actions for delayed rewards [Katsuhiko Miyazaki, Kayoko Miyazaki]

While serotonin is well known to be involved in a variety of psychiatric disorders including depression, schizophrenia, autism, and impulsivity, its role in the normal brain is far from clear despite abundant pharmacological and genetic studies. From the viewpoint of reinforcement learning, we earlier proposed that an important role of serotonin is to regulate the temporal discounting parameter, which controls how far into the future an animal should take outcomes into account when making a decision (Doya, Neural Netw, 2002). To clarify the role of serotonin in natural behaviors, we performed neural recording and microdialysis measurement in rats from the dorsal raphe nucleus, the major source of serotonergic projections to the cortex and the basal ganglia. So far, we have found that the level of serotonin release was significantly elevated when rats performed a task working for delayed rewards compared with immediate rewards (Miyazaki et al., Eur J Neurosci, 2011). We also found that many serotonin neurons in the dorsal raphe nucleus increased their firing rate while the rat stayed at the food or water dispenser in expectation of reward delivery (Miyazaki et al., J Neurosci, 2011). To examine the causal relationship between waiting behavior for delayed rewards and serotonin neural activity, the 5-HT1A agonist 8-OH-DPAT was injected directly into the dorsal raphe nucleus by reverse dialysis to reduce serotonin neural activity. We found that rats treated with 8-OH-DPAT were significantly impaired in waiting for long-delayed rewards (Miyazaki et al., J Neurosci, 2012). These results suggest that activation of dorsal raphe serotonin neurons is necessary for waiting for delayed rewards. Classic theories suggest that central serotonergic neurons are involved in the behavioral inhibition that is associated with the prediction of negative rewards or punishment. Failed behavioral inhibition can cause impulsive behaviors. However, the behavioral inhibition that results from predicting punishment is not sufficient to explain some forms of impulsive behavior. From our experimental results, we proposed that the forebrain serotonergic system is involved both in “waiting to avoid punishment” for future punishments and in “waiting to obtain reward” for future rewards (Miyazaki et al., Mol Neurobiol, 2012). We hypothesize that the increase in 5-HT neural activity during waiting for delayed rewards contributes to the regulation of “waiting to obtain reward”.

Comparison between the rat’s strategy and an optimal strategy for a two-armed bandit task [Makoto Ito]

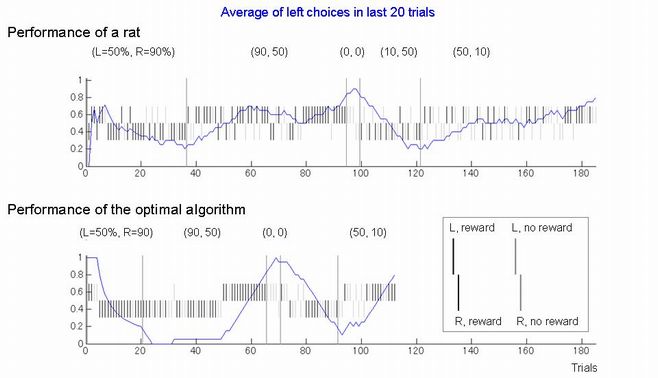

A multi-armed bandit task, modeled on a slot machine with multiple levers, is an important and popular task for investigating the brain mechanisms of decision making. In a two-armed bandit task, different reward probabilities are assigned to the two actions, for example, 50% for the left action and 90% for the right action. Although it is impossible for animals to know the better option from one trial, they can learn to select the better option after several trials. In our task for rats, the reward probabilities are changed when the rat’s choice reaches 80% optimal, so that the rat keeps attending to changes in the reward probabilities. In FY2011, we examined whether the performance of rats on the two-armed bandit task is optimal. First, we derived an optimal strategy analytically. Interestingly, the derived strategy is equivalent to Bayesian estimation of the reward probabilities for left and right, and unlike reinforcement learning algorithms it does not use stochastic exploration. Then, we compared the performance of rats with that of the optimal algorithm. The average number of trials needed in one block was 41 for rats but 23 for the optimal algorithm. This result suggests that the choice performance of rats differed considerably from the optimal behavior. However, the optimal algorithm requires a huge memory capacity to store all past experiences, while the capacity of animals must be limited. Future work is to consider an optimal strategy under a limited memory capacity and to compare it with the performance of rats.

Figure 3: Comparison of the performance between a rat and the optimal algorithm.
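To make the notion of deterministic choice based on Bayesian estimates concrete, here is a minimal sketch of one block of a two-armed bandit task. It is not the optimal strategy derived in the report, whose exact form is not given here. The agent keeps a Beta posterior over each arm's reward probability and always picks the arm with the higher posterior mean, with no random exploration. The uniform prior, the 20-trial window used for the 80% criterion, the trial cap, and the function name `run_block` are all assumptions for illustration.

```python
import random

def run_block(p_left, p_right, window=20, criterion=0.8, max_trials=1000, seed=0):
    """Simulate one block; return the number of trials until the criterion is met."""
    rng = random.Random(seed)
    probs = {"L": p_left, "R": p_right}
    best = "L" if p_left > p_right else "R"
    # Beta(1, 1) prior per arm, stored as [alpha, beta] = [rewards + 1, omissions + 1].
    counts = {"L": [1, 1], "R": [1, 1]}
    recent = []                      # whether each recent choice was the better arm
    for trial in range(1, max_trials + 1):
        means = {a: s / (s + f) for a, (s, f) in counts.items()}
        # Deterministic choice on the posterior means (ties go to the left arm).
        action = "L" if means["L"] >= means["R"] else "R"
        rewarded = rng.random() < probs[action]
        counts[action][0 if rewarded else 1] += 1
        recent.append(action == best)
        recent = recent[-window:]
        if len(recent) == window and sum(recent) / window >= criterion:
            return trial
    return max_trials                # criterion not reached within the cap

trials_needed = run_block(p_left=0.5, p_right=0.9)
```

Because this simple rule only uses posterior means, it can linger on the worse arm for a while. A strategy that is optimal for the task would also have to account for how the block-change rule affects future rewards.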

Investigation of model-based decision making by two-photon microscopy [Funamizu, collaboration with Dr. Kuhn]

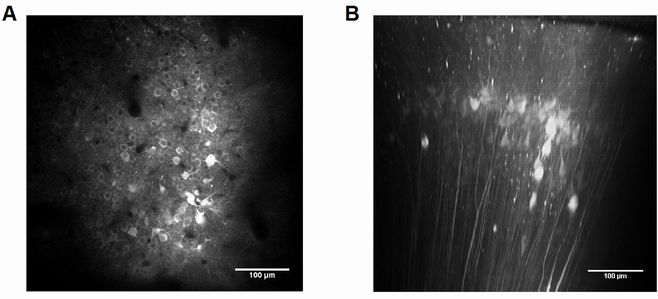

In uncertain and changing environments, we sometimes cannot get enough sensory inputs to know the current context, and therefore must infer the context from limited information to make a decision. We call such internal simulation of context mental simulation. One illustrative example of mental simulation occurs in a game called watermelon cracking, in which a person with eyes closed tries to hit a distant watermelon with a stick: the person needs to estimate his or her position based on actions and sensory inputs, e.g., words from others. To investigate the neural substrate of mental simulation, we use two-photon fluorescence imaging in awake behaving mice, which enables us to simultaneously image multiple identified neurons while the mouse performs a task. This year, we built a setup for conducting a mental-simulation task in mice and started to build a two-photon microscope. In preliminary experiments, we injected an adeno-associated virus delivering the gene of GCaMP3 into the barrel cortex. Figure 4B shows the two-photon image of neurons labeled with GCaMP3. We plan to conduct functional imaging in awake behaving mice.

Figure 4: In vivo imaging of neurons with two-photon microscopy. We imaged layer 2/3 neurons through a chronic cranial window in the xy-plane (A) and reconstructed the xz-plane (B).

Model-free and model-based action selection mechanisms in the human brain [Rodrigues, Ito, Yoshimoto]

We have previously identified distinct prefrontal-basal ganglia and cerebellar networks that are each predominantly engaged in action selection depending on the level of experience of a human with a given task, such as when subjects are learning to make decisions from scratch, using prior knowledge for planning future behaviors, or repeating well-learned actions. We are extending this work to identify which computational strategy humans use for action selection. As a candidate framework we are using reinforcement learning, which is a computational theory of adaptive optimal control, and a model-based analysis method was developed with the support of Professor Kenji Doya and Makoto Ito of the Neural Computation Unit, OIST. The work resumed with the writing of a journal paper.

The computational principle of flexible learning of action sequences [Hoshino]

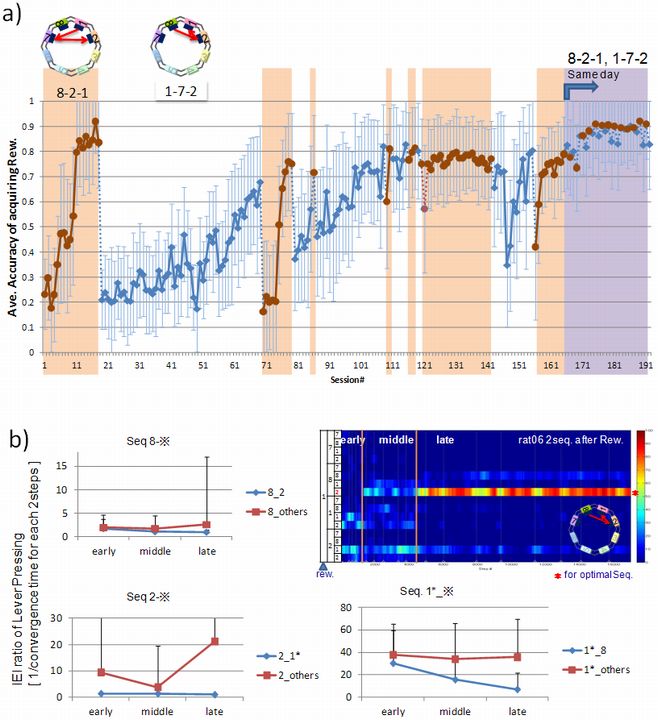

When we apply machine learning techniques to robotic intelligence, the major challenge is the huge uncertainty in the environment. To address this problem, we investigate how our brain flexibly selects the right learning algorithms and parameters for motor sequence learning without any prior knowledge of the correct sequence or task constraints. We developed a motor sequence learning task in which rats learn to press levers on the walls of an octagonal operant chamber, with intracranial self-stimulation reward delivered only after the completion of the entire sequence.

Figure 5: Performance for multiple sequences and time-series features of lever-pressing actions. (a) The rat could remember multiple sequences. [1skip&adjoining, 1skip&2skip] (b) Actions relating to the optimal sequence became slightly faster and other false pressings became slower. [correct response with struggling ⇒ false with thinking.]

3.3 Adaptive Systems Group

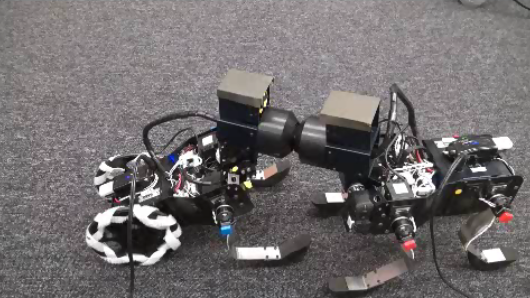

Mating behavior by model-based policy gradient [Uchibe]

Realizing mating behaviors in Spring Dog robots is critical for conducting evolutionary experiments on our robotic platform. In this study, we developed a policy gradient reinforcement learning method that utilizes an inaccurate forward model and a differentiable reward function. In general, it is difficult to prepare or learn a precise forward model of a real robot system, but we assume that the forward dynamics can be roughly approximated by an inaccurate linear model, which can be learned very quickly. The learned forward model enables the policy gradient method to exploit the gradient of the reward function, which accelerates learning. To test this method, we implemented the learning of mating behaviors between the wheeled-type and the legged-type Spring Dogs. Since a Spring Dog has a physical connector on its head, the two robots must control their head positions cooperatively in order to communicate with each other. Preliminary experiments show that two robots can kiss each other successfully after learning.

Figure 6: Mating behavior by two Spring Dogs
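The general idea of letting a rough linear forward model supply the gradient of a differentiable reward can be sketched on a toy one-dimensional system. This is not the algorithm developed by the group, whose details are not given in this report. The sketch fits a linear model by least squares from random exploration, then improves a deterministic linear policy `u = -k * x` by ascending the one-step reward gradient, where the derivative of the next state with respect to the action is taken from the learned model. The toy dynamics, the reward, the learning rate, and all names are assumptions for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)

def step(x, u):
    """'Real' dynamics, unknown to the learner and mildly nonlinear (hypothetical)."""
    return 0.9 * x + 0.5 * u + 0.05 * np.sin(x)

# 1) Learn a rough linear forward model x' ~ a_hat*x + b_hat*u from random exploration.
xs = rng.uniform(-2.0, 2.0, 200)
us = rng.uniform(-1.0, 1.0, 200)
features = np.column_stack([xs, us])
(a_hat, b_hat), *_ = np.linalg.lstsq(features, step(xs, us), rcond=None)

# 2) Improve the policy u = -k*x using the differentiable reward
#    r(x', u) = -x'^2 - 0.1*u^2 and the model derivative dx'/du = b_hat.
k = 0.0
learning_rate = 0.05
for episode in range(200):
    x = rng.uniform(-2.0, 2.0)
    for t in range(10):
        u = -k * x
        x_next = step(x, u)                   # observed on the "real" system
        dr_dxnext = -2.0 * x_next
        dr_du = -0.2 * u
        du_dk = -x
        grad_k = (dr_dxnext * b_hat + dr_du) * du_dk   # chain rule through the model
        k += learning_rate * grad_k
        x = x_next

# 3) Roll out the learned policy from a fixed start state.
x = 1.5
for t in range(10):
    x = step(x, -k * x)
final_state = x
```

The learned model is only approximate (it ignores the sine term), yet its derivative is accurate enough to point the policy parameter in a useful direction, which is the property the paragraph above relies on.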

Robot evolution of polymorphism [Elfwing, Uchibe]

Polymorphism has fascinated evolutionary biologists since the time of Darwin. Biologists have observed discrete alternative mating strategies in many different species, for both males and females. In this study, we demonstrate that polymorphic mating strategies can emerge in a small colony of hermaphroditic robots, using our earlier proposed framework for performing robot embodied evolution with a limited population size. We used a survival task where the robots maintained their energy levels by capturing energy sources and physically exchanged genotypes for the reproduction of offspring. The reproductive success was dependent on the individuals’ energy levels, which created a natural trade-off between the time invested in maintaining a high energy level and the time invested in attracting potential mating partners. In the experiments, two types of mating strategies emerged: 1) roamers, who never waited for a potential mating partner, and 2) stayers, who waited for potential mating partners, with a waiting threshold that depended on their current energy level and sensory inputs. In a majority of the experiments, the emerged populations consisted of individuals executing only one mating strategy. However, in a minority of the simulations, there emerged an evolutionarily stable polymorphic population of roamers and stayers. In one instance, the stayers were split into two subpopulations: stayers who almost always waited for potential mating partners (strong stayers), and stayers who only waited if the energy level was high and an energy source was close (weak stayers). Our analyses show that a population consisting of three phenotypes could also constitute a globally stable polymorphic ESS with several attractors.

Figure 7: De Finetti diagram of the directions and magnitudes of the changes in subpopulation proportions for the three phenotypes, strong stayers (SS), weak stayers (WS), and roamers (R). The small blue circles indicate the tested phenotype proportions. The red arrows show the average direction and magnitude of the change in phenotype proportions (magnified by a factor of five for visualization purposes). The green line, starting in the black circle and ending in the black triangle, shows the phenotype proportions in the final 20 generations of the evolutionary experiments. The gray-scale coloring visualizes the average population fitness.

Linearly solvable MDPs with model learning [Kinjo, Uchibe]

In general, we have to solve a nonlinear Hamilton-Jacobi-Bellman (HJB) equation in order to design an optimal controller. We have been interested in the approach proposed by Emanuel Todorov and his colleague because it enables us to solve the HJB equation efficiently. In their approach, they linearize the HJB equation by introducing a constraint on the cost function and an exponential transformation of the objective function. However, their method has been tested only in simple computer simulations, and it assumes that the forward model is given to the learning algorithm. Therefore, we combine their approach with model learning and validate the combined method using our robot called Spring Dog. The task is to approach a battery pack placed in the environment. At first, Spring Dog collects sequences of observations and motor commands by exploring the environment in order to construct the forward model. Then, the optimal controller is derived using Todorov’s method and applied to control the robot. Experimental results show that Spring Dog learns approaching behaviors successfully using the proposed method.

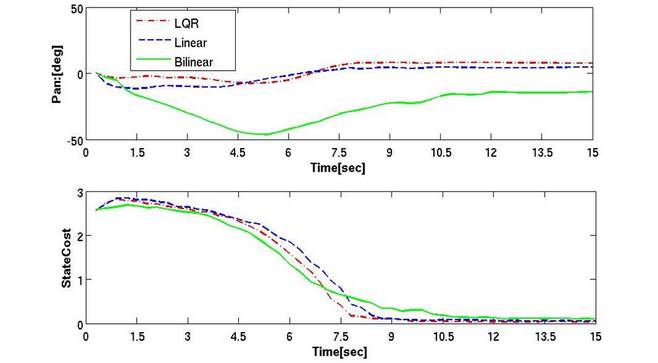

Figure 8: Performance comparison between LMDPs and conventional LQR. The blue and red dotted lines show the results of the linearly solvable MDP (LMDP) with the linear forward model and bilinear model, respectively.
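The effect of the exponential transformation can be illustrated in the simplest discrete-state, first-exit setting, which is much simpler than the continuous robot task described above. Writing the desirability as z = exp(-v), where v is the optimal cost-to-go, the Bellman equation becomes linear: z(x) = exp(-q(x)) * sum over x' of p(x'|x) z(x'), with p the passive (uncontrolled) dynamics and q the state cost. The chain world, the costs, and the variable names below are assumptions chosen only for illustration.

```python
import numpy as np

n = 10                      # states 0..9 on a chain; state 9 is the goal (terminal)
goal = n - 1
q = np.full(n, 0.2)         # state cost per step (hypothetical)
q[goal] = 0.0

# Passive dynamics: unbiased random walk, reflecting at the left end.
P = np.zeros((n, n))
for i in range(n):
    P[i, max(i - 1, 0)] += 0.5
    P[i, min(i + 1, n - 1)] += 0.5

# Linear Bellman equation for the desirability z = exp(-v):
#   interior states: z_N = G (P_NN z_N + P_NT z_T), with G = diag(exp(-q_N))
#   terminal state:  z_T = exp(-q_T)
interior = np.arange(n) != goal
G = np.diag(np.exp(-q[interior]))
P_nn = P[np.ix_(interior, interior)]
P_nt = P[interior, goal]
z = np.ones(n)
z[goal] = np.exp(-q[goal])
z[interior] = np.linalg.solve(np.eye(n - 1) - G @ P_nn, G @ (P_nt * z[goal]))

v = -np.log(z)              # optimal cost-to-go

# Optimal controlled transitions: passive dynamics reweighted by desirability.
U = P * z[None, :]
U /= U.sum(axis=1, keepdims=True)
```

Solving for `z` requires only one linear solve, with no iteration over actions. The resulting `U` shifts probability toward the goal relative to the unbiased passive random walk.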

Development of smartphone robot platform [Yoshida, Uchibe]

Recent smartphones offer high computational performance and various sensors in a small body. Therefore, various applications of smartphones have been proposed in commercial and academic studies. This study proposes and develops a new robot platform based on a smartphone, called Smart Phone Robot, intended as a development platform for future home robots. To achieve this goal, we designed a new hardware system for the smartphone robot and proposed a software architecture necessary for the control application of the robot. We ran the control application on the real system in order to evaluate its real-time performance. Next, we developed a two-wheeled self-balancing robot using the architecture of the smartphone robot. We showed that the smartphone can actually control the balancing robot while executing face recognition using the onboard vision system.

4. Publications

4.1 Journals

- Funamizu, A., Ito, M., Doya, K., Kanzaki, R. & Takahashi, H. Uncertainty in action-value estimation affects both action choice and learning rate of the choice behaviors of rats. European Journal of Neuroscience 35, 1180-1189 (2012)

- Ito, M. & Doya, K. Multiple representations and algorithms for reinforcement learning in the cortico-basal ganglia circuit. Curr Opin Neurobiol, doi:10.1016/j.conb.2011.04.001 (2011).

- Miyazaki, K., Miyazaki, K. W. & Doya, K. The role of serotonin in the regulation of patience and impulsivity. Molecular Neurobiology 45(2), 213-224 (2012). Epub 2012 Jan 20.

- Pammi VS, Miyapuram KP, Ahmed, Samejima K, Bapi RS & Doya, K. Changing the structure of complex visuo-motor sequences selectively activates the fronto-parietal network. NeuroImage (2011).

- Yoshimoto, J., Sato, M. A. & Ishii, S. Bayesian Normalized Gaussian Network and Hierarchical Model Selection Method. Intell Autom Soft Co 17, 71-94 (2011).

4.2 Books and other one-time publications

- Uchibe, E. & Doya, K. Evolution of rewards and learning mechanisms in Cyber Rodents. in Neuromorphic and Brain-Based Robots 109-128 (Cambridge University Press, Cambridge, 2011)

4.3 Oral and Poster Presentations

- Doya, K. Neural Mechanisms of Reinforcement Learning (Japanese), Lecture at University of Tokyo, Tokyo, June 7, 2011

- Doya, K. Neural Mechanisms of Reinforcement Learning (Japanese), Lecture at Kyoto University, Kyoto, June 8, 2011

- Doya, K. Toward building novel neuroinformatic basis (Japanese), Strategic Research Program for Brain Science, 2nd Workshop, Osaka, June 21 2011

- Doya, K. Selection of state encoding, algorithms, and parameters in reinforcement learning, Okinawa Computational Neuroscience Course 2011, Okinawa, June 27, 2011

- Doya, K. Reinforcement Learning, Bayesian inference and brain science (Japanese), Kyoto University Lecture, August 24, 2011

- Doya, K. Neural Mechanisms of Reinforcement Learning: present situation and future prospects (Japanese), Lecture at Toyota Central R&D Lab's, Nagakute-cho, September 13, 2011

- Doya, K., Miyazaki, K. W. & Miyazaki, K. Serotonin activity and the regulation of impulsivity, Japanese Alcohol, Nicotine & Drug Addiction Conference 2011, Nagoya, Aichi, October 13-15, 2011

- Doya, K. Needs for molecular neuroanatomy in computational neuroscience of reinforcement learning, A course in Molecular Neuroanatomy, Onna, Okinawa, October 20, 2011

- Doya, K. Reinforcement learning, basal ganglia, and neuromodulation, Lecture at NAIST, Ikoma, Nara, October 28, 2011

- Doya, K. How the brain makes decisions, Symposium for Dr Brenner, Onna, Okinawa, November 19, 2011

- Doya, K. Parallelism of multiple decision strategies in the cortico-basal ganglia networks, The 11th Workshop on Neurobiology and Neuroinformatics (NBNI 2011), Onna, Okinawa, December 19, 2011

- Doya, K. Toward real-time simulation of the visual-oculomotor system (Japanese), SLiM Reporting Meeting 2011, Tokyo, December 21-22, 2011

- Funamizu, A., Ito, M., Doya, K., Kanzaki, R. & Takahashi, H. Uncertainty-seeking in rats' choice behaviors and its dependence on reward context, 34th Annual Meeting of the Japan Neuroscience Society, Yokohama, September 16, 2011

- Funamizu, A., Ito, M., Doya, K., Kanzaki, R. & Takahashi, H. Task-dependent uncertainty preference of rats in a free choice task, 21st Annual Conference of the Japanese Neural Network Society, Okinawa, Japan, December 15, 2011

- Funamizu, A., Kuhn, B. & Doya, K. Proposal for investigation of model-based decision making by two-photon microscopy, 12th Winter Workshop on the Mechanism of Brain and Mind, Hokkaido, Japan, January 15, 2012

- Ito, M. & Doya, K. Neural coding of value-based and finite state-based decision strategies in the dorsal and ventral striatum, 34th Annual Meeting of the Japan Neuroscience Society, Yokohama, September 16, 2011

- Ito, M. Algorithms of decision making based on reward and neuronal representation in the striatum, Hominization workshop, Inuyama, Aichi, March 15-16, 2012

- Miyazaki, K. W., Miyazaki, K. & Doya, K. Activation of dorsal raphe serotonin neurons is necessary for waiting for delayed rewards, 34th Annual Meeting of the Japan Neuroscience Society, Yokohama, September 16, 2011

- Otsuka, M., Yoshimoto, J., Elfwing, S., Doya, K. Solving POMDPs using restricted Boltzmann machines with echo state networks (Japanese), IEICE Neurocomputing Workshop, Okinawa, June 24, 2011

- Otsuka, M., Yoshimoto, J., Doya, K. Hierarchical Integrated Simulation for Predictive Medicine Sub Theme B:Integrated simulation of the whole-body musculoskeletal-nervous system for clarification of motor dysfunctions due to neurological diseases (Japanese), RIKEN HPCI General Meeting, Wako, Saitama, December 4, 2011

- Yoshimoto, J. & Doya, K. Model-based identification of synaptic connectivity from multi-neuronal spike train data (Japanese), 25th Bioinformatics Workshop, Information Processing Society of Japan, Nishihara, Okinawa, June 23-24, 2011

- Yoshimoto, J. & Doya, K. Sparse Bayesian identification of synaptic connectivity from multi-neuronal spike train data, Neuroinformatics 2011, Boston, USA, September 4-6, 2011

- Yoshimoto, J. & Doya, K. A statistical learning method for identifying synaptic connections from spike train data, The 49th Annual Meeting of the Biophysical Society of Japan, Himeji, Japan, September 16-18, 2011

- Yoshimoto, J. Identification of synaptic connectivity from multi-neuronal spike trains based on MAT model and hierarchical Bayesian inference (Japanese), ISM Workshop on Interaction between Neuroscience and Statistical Science, The Institute of Statistical Mathematics, Tachikawa, Tokyo, December 26-27, 2011

5. Intellectual Property Rights and Other Specific Achievements

Nothing to report

6. Meetings and Events

6.1 Seminar

- Date: August 30, 2011

- Venue: OIST Campus Lab1

- Speakers:

- Dr. Yuki Sakai (Graduate School of Medical Science, Kyoto Prefectural University of Medicine)

- Dr. Jin Narumoto (Graduate School of Medical Science, Kyoto Prefectural University of Medicine)

- Title: Aberrant basal ganglia circuitry of patients with Obsessive-Compulsive Disorder

6.2 Seminar

- Date: October 3, 2011

- Venue: OIST Campus Lab1

- Speaker:

- Dr. Kazuyuki Samejima (Brain Science Institute, Tamagawa University)

- Title: The neural activities in the rostral-striatum during the cognitive decision-making

6.3 Seminar

- Date: October 12, 2011

- Venue: OIST Campus Center Building

- Speaker:

- Dr. Sean Hill (International Neuroinformatics Coordinating Facility, Karolinska Institutet)

- Title: Toward a global infrastructure for neuroscience data sharing and integration

6.4 Seminar

- Date: November 2, 2011

- Venue: OIST Campus Lab1

- Speaker: Dr. Michael Lazarus (Osaka Bioscience Institute)

- Title: The role of adenosine A2A receptors in the nucleus accumbens for sleep-wake regulation

- Speaker: Dr. Yoshihiro Urade (Osaka Bioscience Institute)

- Title: Humoral and neural regulation of sleep - Lesson from prostaglandin D2-induced sleep

6.5 Satellite Symposium of the JNNS2011: Young Researcher's Symposium

- Date: December 14-15, 2011

- Venue: OIST Seaside House

- Organizer: Society for Young researchers on Neuroscience

- Sponsor: OIST

- Speakers:

- Dr. Peter Dayan (University College London)

- Dr. Jeff Wickens (OIST)

- Dr. Mitsuo Kawato (ATR)

6.6 The 21st Annual Conference of the Japan Neural Network Society (JNNS2011)

- Date: December 15-17, 2011

- Venue: OIST Campus Center Building

- Sponsor: Japan Neural Network Society

- Cosponsor: OIST

- Cooperation:

- Japanese Neuroscience Society

- Institute of Electronics, Information, and Communication Engineers

- Society of Instrument and Control Engineers

- The Institute of Electrical Engineers of Japan

- Fuzzy Logic Systems Institute

- Japan Society for Fuzzy Theory and Intelligent Informatics

- The Robotics Society of Japan

- Japanese Cognitive Science Society

- Information Processing Society of Japan

- Supported by Grant-in-Aid for Scientific Research on Innovative Areas: Prediction and Decision Making

- Keynote speakers:

- Peter Dayan (University College London)

- Richard Sutton (University of Alberta)

- 25 Oral presentations

- 108 Poster presentations

6.7 The 11th Workshop on Neurobiology and Neuroinformatics (NBNI 2011)

- Venue: OIST Seaside House

- Sponsor: Japan Neural Network Society

- Cosponsor:

- OIST

- Comprehensive Brain Science Network (CBSN)

- Speakers:

- Shigang He (Institute of Biophysics, Chinese Academy of Sciences)

- Zhihua Wu (Institute of Biophysics, Chinese Academy of Sciences)

- Jiulin Du (Institute of Neuroscience, Chinese Academy of Sciences)

- Xiaohui Zhang (Institute of Neuroscience, Chinese Academy of Sciences)

- Liqing Zhang (Shanghai Jiaotong University)

- K. Y. Michael Wong (Hong Kong University of Science & Technology)

- Jong C. Ye (KAIST)

- Jong-Hwan Lee (Korea University)

- Seunghwan Kim (Postech)

- Seul-Ki Yeom, Heung-Il Suk, and Seong-Whan Lee (Korea University)

- Minho Lee (Kyungpook National University)

- Suh-Yeon Dong and Soo-Young Lee (KAIST)

- Kenji Doya (OIST)

- Tadashi Isa (NIPS)

- Jun Morimoto (ATR)

- Masafumi Oizumi (RIKEN BSI)

- Kukjin Kang (RIKEN BSI)

6.8 Seminar

- Date: March 19, 2012

- Venue: OIST Campus Lab1

- Speaker: Dr. Takakuni Goto (Tohoku University)

- Title: A Volumetric Current Source Density Analysis with Realistic Geometrical Property and Conductivity Profile in Somatosensory Cortex of Rats

7. Other

Nothing to report.