Robotic Control Using Direct Inverse Reinforcement Learning (No. 0061, 0097)

Summary

Robotic control algorithm based on direct inverse reinforcement learning with density ratio estimation.

The global artificial intelligence (AI) market is projected to reach USD 299 billion by 2026, growing at a CAGR of 35% over the forecast period. AI is the science and technology of giving computer systems human-like problem-solving skills through learning. Human intelligence combines consciousness and emotionality, which AI attempts to emulate using complex mathematical methods.

Understanding human behavior from observation is crucial for developing artificial systems that can interact with people. Because human decision making is influenced by the rewards and costs associated with selected actions, the problem can be formulated as estimating those rewards and costs from observed behavior. However, designing and preparing an appropriate reward/cost function is a critical problem, and known algorithms are usually very time-consuming even when a model of the environment is available.

Here, we present a promising robotic control algorithm developed by a group of researchers led by Prof. Kenji Doya. The algorithm overcomes the above problems and provides a more reliable and faster control scheme.

Applications

- Robotic Control

- Analysis of Web Experience – Like Predictions

- Imitation Learning

Advantages

- Model-Free Method – No need to know environmental dynamics

- Data Efficient – Easier to collect data

- Computationally Efficient – Fast

Technology

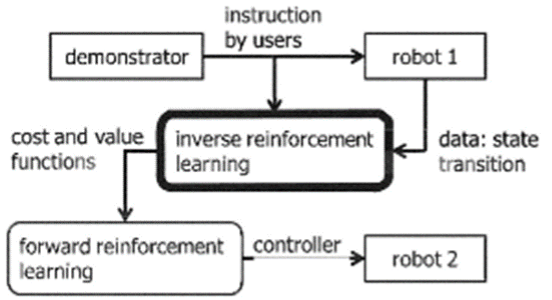

The algorithm has two components: (1) learning the ratio of state transition probabilities with and without control by density ratio estimation, and (2) estimating the cost and value functions that are compatible with that ratio by a regularized least squares method. Because each step uses an efficient algorithm, the method is more data-efficient and computationally efficient than other inverse reinforcement learning methods. For robot implementation, a demonstrator controls a robot to accomplish a task, and the sequence of states and actions is recorded. The inverse reinforcement learning algorithm then estimates the cost and value functions, which are passed to forward reinforcement learning controllers for different robots.
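To make the second component concrete, the following is a minimal sketch, not the researchers' implementation. It assumes the log ratio of controlled to uncontrolled transition probabilities has already been estimated for each recorded transition x → y (the output of the first component). It further assumes the relation used in the linearly solvable MDP framework, -ln(π(y|x)/p(y|x)) = q(x) + γV(y) - V(x), which the summary above does not state explicitly. The function name `fit_cost_and_value`, the quadratic feature choice, and the synthetic data are all hypothetical and chosen only for illustration.

```python
import numpy as np


def fit_cost_and_value(x, y, log_ratio, gamma=0.95, ridge=1e-8):
    """Ridge-regress a cost q and value V from log transition-probability ratios.

    Assumed relation (an assumption, not taken from the summary):
        -log_ratio = q(x) + gamma * V(y) - V(x)
    Hypothetical models on a 1-D state:
        q(x) = wq[0] + wq[1] * x + wq[2] * x**2
        V(x) = wv[0] * x + wv[1] * x**2
    V has no constant term because a constant offset in V cannot be
    separated from the constant term of q under this relation.
    """
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    # One row per observed transition; columns are ordered as [wq, wv].
    design = np.column_stack([
        np.ones_like(x), x, x**2,             # features of q(x)
        gamma * y - x, gamma * y**2 - x**2,   # features of gamma*V(y) - V(x)
    ])
    target = -np.asarray(log_ratio, dtype=float)
    # Regularized least squares: solve (A^T A + ridge * I) w = A^T b.
    gram = design.T @ design + ridge * np.eye(design.shape[1])
    weights = np.linalg.solve(gram, design.T @ target)
    return weights[:3], weights[3:]


# Synthetic check: build log ratios from known weights, then recover them.
rng = np.random.default_rng(0)
gamma = 0.95
x = rng.uniform(-1.0, 1.0, size=500)          # current states
y = x + rng.normal(0.0, 0.3, size=500)        # next states
true_wq = np.array([0.1, 0.0, 1.0])
true_wv = np.array([0.0, 2.0])
q_x = true_wq[0] + true_wq[1] * x + true_wq[2] * x**2
v_x = true_wv[0] * x + true_wv[1] * x**2
v_y = true_wv[0] * y + true_wv[1] * y**2
log_ratio = -(q_x + gamma * v_y - v_x)

wq, wv = fit_cost_and_value(x, y, log_ratio, gamma=gamma)
print("estimated cost weights:", wq)
print("estimated value weights:", wv)
```

In practice the log ratios would come from a density ratio estimator applied to recorded demonstrations rather than from known weights, and the `ridge` penalty would be tuned (for example by cross-validation) to cope with noise in those estimates.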

Media Coverage and Presentations

JST Technology Showcase Presentation (JP Only)

JST Technology Showcase Presentation Slides (JP Only)

Contact for More Information

Technology Licensing Section

OIST Innovation

tls@oist.jp

+81(0)98-966-8937