Computing resources

We provide two HPC clusters, three data storage systems, and a tape-based archive and backup system to support your scientific computing at OIST and to store your research data (see our page on Research storage at OIST). The HPC clusters and the large data storage systems are hosted on campus at the Onna village site, whereas the archive and backup system is located in a dedicated facility in Nago city.

HPC Clusters

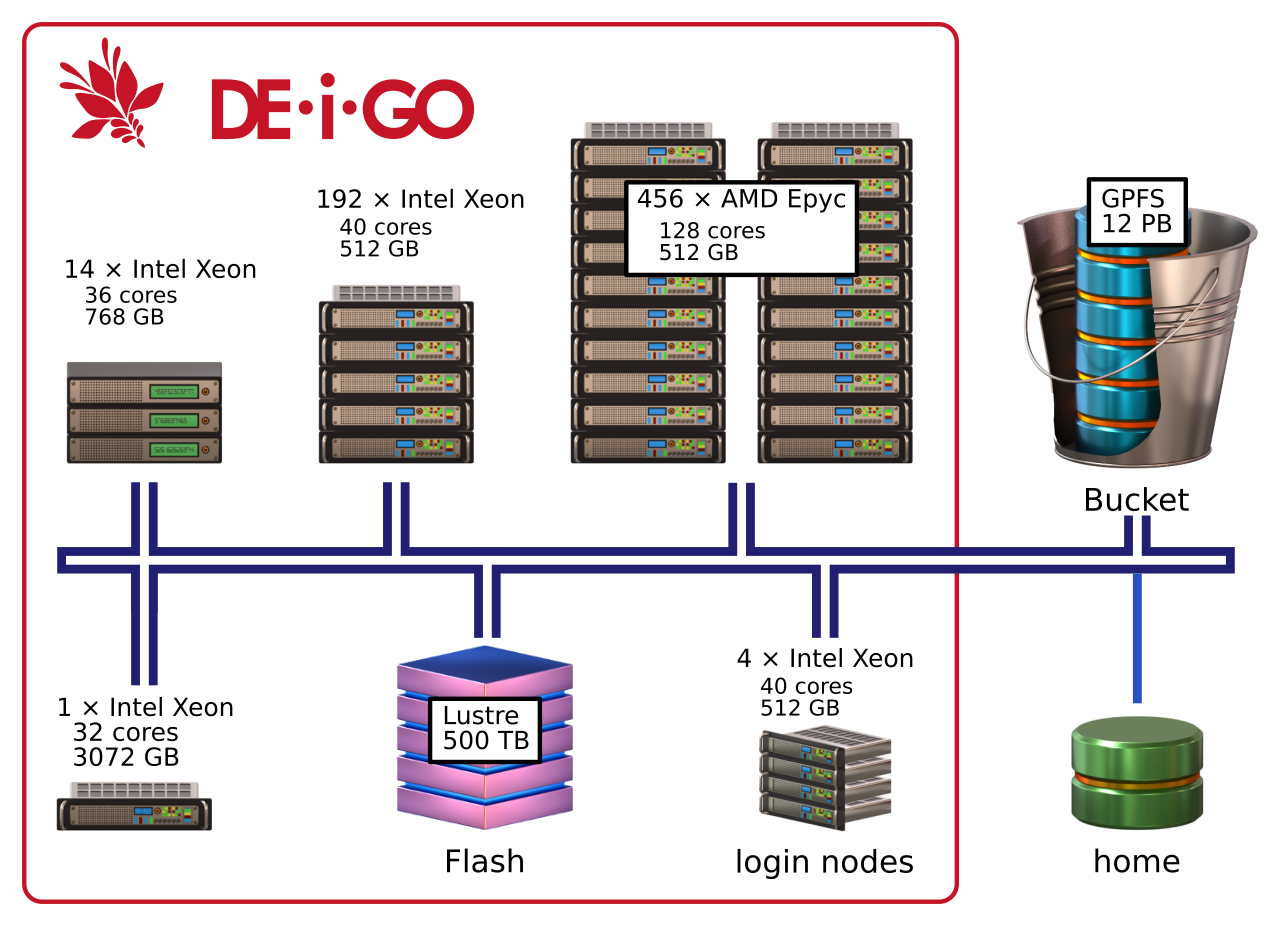

We operate two high-performance computing (HPC) clusters, Deigo and Saion, which are located in the main campus data center. Deigo is the current main research computing cluster. It consists of 66,584 compute cores (processing units) with a total of 337TB (terabytes) of memory.

Saion is a cluster for accelerator-based computing. It currently houses:

- 32 GPU compute nodes, each equipped with four NVIDIA P100 or V100 GPUs;
- 4 nodes, each with 8 A100 GPUs;
- a small number of CPU nodes for data postprocessing.

It is especially well suited to image processing and deep learning.

Deigo

Deigo has two main types of compute nodes, with CPUs from AMD and from Intel. The main AMD nodes have two AMD EPYC 7702 CPUs (128 cores in total) and 512GB of memory. The secondary Intel nodes have two Intel Xeon Gold 6230 CPUs (40 cores in total) and 512GB of memory. The system also includes a number of nodes with fewer cores and more memory, as well as nodes for data copying and other tasks.
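When sizing jobs, a rough guide is the average memory per core on the two main node types. You get it by dividing each node's memory by its core count. This assumes the memory is shared evenly across all cores and ignores whatever the operating system reserves, so the usable figure will be somewhat lower:

$$
\begin{aligned}
\text{AMD nodes:}\quad & \frac{512\ \text{GB}}{128\ \text{cores}} = 4\ \text{GB per core} \\
\text{Intel nodes:}\quad & \frac{512\ \text{GB}}{40\ \text{cores}} = 12.8\ \text{GB per core}
\end{aligned}
$$

A job that needs much more than about 4GB per core may therefore fit better on the Intel nodes or on the nodes with more memory.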

The working storage (called "Flash") is a 500TB SSD-based Lustre file system from DDN. It is high-performance, read-write temporary storage that the compute nodes can access very quickly. Each research unit has a non-expandable 10TB allocation.

Saion

Saion has a mix of hardware types. The main GPU nodes have two Intel CPUs and either four NVIDIA Tesla P100 or four Tesla V100 GPUs. Each of these host nodes has 36 cores and 512GB of memory, and the GPUs have 16GB of memory each. Another 4 GPU nodes each have AMD CPUs with 128 cores, 2TB of memory, and 8 A100-SXM4 GPUs with 80GB of memory each.
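Multiplying out the figures above gives two useful numbers:

- the combined GPU memory of a single node, which matters for a job that spreads one model across all GPUs in a node;
- the total GPU count, using the 32 P100/V100 nodes and 4 A100 nodes listed earlier.

This assumes the 16GB figure applies to every P100 and V100 card:

$$
\begin{aligned}
\text{P100/V100 node:}\quad & 4 \times 16\ \text{GB} = 64\ \text{GB of GPU memory} \\
\text{A100 node:}\quad & 8 \times 80\ \text{GB} = 640\ \text{GB of GPU memory} \\
\text{P100/V100 GPUs in total:}\quad & 32 \times 4 = 128 \\
\text{A100 GPUs in total:}\quad & 4 \times 8 = 32
\end{aligned}
$$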

In addition, there are four CPU nodes for postprocessing and testing. Each has two Intel Xeon Gold 6148 CPUs (40 cores in total) and 512GB of memory.

The compute storage (mounted as /work) is a 200TB SSD (solid-state) storage system, with a non-expandable 10TB allocation per unit.

Software

We have a large collection of both free and licensed software on the clusters. You are welcome to build and install your own software, but where possible we recommend that you use the software we provide on the clusters. Very briefly:

module avail gives you a list of the available software, and module whatis gives you a full list with descriptions:

$ module avail

$ module whatis

You load a piece of software with module load:

$ module load meraculous

The page on using the module system will give you much more detail on using software on the clusters.
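A job script usually loads the modules it needs before running anything else. The sketch below wraps this step in a hypothetical helper, load_modules (not part of the module system). It loads each named module in turn, stops at the first one that fails, and then records the loaded set with module list. It assumes that module load returns a non-zero exit status when a module cannot be loaded, as current Lmod and Environment Modules releases do; check this on the system you use.

```shell
#!/usr/bin/env bash
# load_modules (hypothetical helper): load each named module in turn and
# stop at the first one that fails. Assumes `module load` returns a
# non-zero exit status when a module cannot be loaded.
load_modules() {
  local name
  for name in "$@"; do
    if ! module load "$name"; then
      echo "load_modules: could not load module '$name'" >&2
      return 1
    fi
  done
  # Record the loaded modules in the job output for later reference.
  module list
}
```

For example, a job script could start with load_modules meraculous || exit 1. The job then stops early instead of running without its software.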

File systems

We provide research data storage that you can access both from your workstation or laptop and from the HPC clusters. On the HPC clusters, this storage is available through the following file systems.

Parallel File Systems (High performance):

The high-performance file systems are used during computations. They are very fast, but have a limited size and are not backed up.

| System | Capacity | Allocation | Mounted on |
|---|---|---|---|
| Deigo Flash | 500TB | 10TB/unit | /flash (Deigo), /deigo-flash (Saion) |
| Saion Work | 200TB | 10TB/unit | /work (Saion), /saion-work (Deigo) |
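Each parallel file system is mounted under a different path depending on which cluster you are logged in to. The lookup in the table above can be written as a small shell function. The name fast_fs_path and its two arguments are hypothetical, chosen only for this sketch:

```shell
#!/usr/bin/env bash
# fast_fs_path (hypothetical helper): print the mount point of a parallel
# file system as seen from a given cluster, following the table above.
#   $1 = file system: "flash" (Deigo Flash) or "work" (Saion Work)
#   $2 = cluster you are logged in to: "deigo" or "saion"
fast_fs_path() {
  case "$1:$2" in
    flash:deigo) echo /flash ;;
    flash:saion) echo /deigo-flash ;;
    work:saion)  echo /work ;;
    work:deigo)  echo /saion-work ;;
    *) echo "fast_fs_path: unknown combination '$1' on '$2'" >&2; return 1 ;;
  esac
}
```

For example, fast_fs_path flash saion prints /deigo-flash. The cross-mounted paths let you reach one cluster's fast storage from the other.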

NFS File Systems:

This storage is intended for long-term storage of data and results, or for your personal configuration files, source code, and so on.

| Filesystem | Size | Mounted on |
|---|---|---|
| bucket | 60PB | /bucket (read-only from compute nodes) |
| home | 8.0TB | /home (accessible from all nodes) |

Use /flash (Deigo) or /work (Saion) for all your computing work. Your unit has its own directory on these parallel high-performance file systems. They are much faster than your home directory and can handle very large amounts of data.

Use /bucket for long-term storage of research data. It has a high capacity and is backed up, with an off-site backup in Nago city. Bucket is read-only on the compute nodes, so use this workflow:

1. Read your input data from bucket.
2. Do your computing on /flash or /work.
3. Copy the results back to bucket at the end. Because the compute nodes cannot write to bucket, this last step cannot run on the compute nodes themselves.

If you need to move very large amounts of data, you can use the special datacp partition on Deigo.
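The bucket to /flash to bucket workflow can be sketched as two shell functions. Everything here is a hypothetical placeholder to adapt to your own unit:

- the function names stage_in and stage_out;
- the variables BUCKET_DIR and FLASH_DIR, and their default paths under /bucket/MyUnit and /flash/MyUnit;
- the input/ and results/ subdirectories.

The sketch assumes stage_out is run from a node where bucket is writable, for example (presumably) the datacp partition for large transfers, not from a regular compute job.

```shell
#!/usr/bin/env bash
# Sketch of the bucket -> /flash -> bucket workflow. The directory layout
# (BUCKET_DIR, FLASH_DIR and their input/ and results/ subdirectories) is
# hypothetical: replace the defaults with your own unit's paths.
BUCKET_DIR="${BUCKET_DIR:-/bucket/MyUnit/my-project}"
FLASH_DIR="${FLASH_DIR:-/flash/MyUnit/my-project}"

# stage_in: copy the input data from bucket to fast storage.
# Reading bucket is allowed on the compute nodes, so this can run in a job.
stage_in() {
  mkdir -p "$FLASH_DIR" || return 1
  cp -a "$BUCKET_DIR/input" "$FLASH_DIR/"
}

# stage_out: copy the results from fast storage back to bucket.
# Bucket is read-only on the compute nodes, so run this on a node that can
# write to bucket, not inside a regular compute job.
stage_out() {
  mkdir -p "$BUCKET_DIR/results" || return 1
  cp -a "$FLASH_DIR/results/." "$BUCKET_DIR/results/"
}
```

Once you have checked the copy on bucket, delete the working files under /flash so they no longer count against your unit's 10TB allocation.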

Use your /home directory only for documents, configuration files, source code, and similar files. The file system is quite slow and its storage space is limited, so do not use it for any computation. It is also not backed up, so please keep anything important on bucket.