FY2023 Annual Report

Neural Coding and Brain Computing Unit

Professor Tomoki Fukai

Abstract

1. Staff

- Staff Scientist

- Tatsuya Haga, PhD (~May 2023)

- Postdoctoral Scholar

- Ibrahim Alsolami, PhD

- Milena Menezes Carvalho, PhD

- Daniel Müller-Komorowska, PhD

- Tomas Barta, PhD

- Ph.D. Student

- Thomas Burns (~Aug 2023)

- Gaston Sivori

- Roman Koshkin

- Munenori Takaku

- Hugo Musset

- Balashwethan Chockalingam

- Rotation Student

- Shuma Oya (Term 3)

- Temma Fujishige (Term 3)

- Dmitrii Shipkov (Term 1)

- Jiajun Chen (Term 2)

- Research Intern

- Hikaru Endo (May-Jul 2023)

- Research Unit Administrator

- Kiyoko Yamada

2. Collaborations

2.1 "Idling Brain" project

kaken.nii.ac.jp/en/grant/KAKENHI-PROJECT-18H05213

- Supported by Grant-in-Aid for Specially Promoted Research (KAKENHI)

- Type of collaboration: Joint research

- Researchers:

- Professor Kaoru Inokuchi, University of Toyama

- Dr. Khaled Ghandour, University of Toyama

- Dr. Noriaki Ohkawa, University of Toyama

- Dr. Masanori Nomoto, University of Toyama

2.2 Chunking sensorimotor sequences in human EEG

- Type of collaboration: Joint research

- Researchers:

- Professor Keiichi Kitajo, National Institute for Physiological Sciences

- Dr. Yoshiyuki Kashiwase, OMRON Co. Ltd.

- Dr. Shunsuke Takagi, OMRON Co. Ltd.

- Dr. Masanori Hashikaze, OMRON Co. Ltd.

2.3 Hippocampal Helmholtz machine

- Type of collaboration: Internal joint research

- Researchers:

- Tom M George, internship student, UCL

- Professor Kimberly Stachenfeld, Google DeepMind

- Professor Caswell Barry, UCL

- Professor Claudia Clopath, ICL

2.4 Analysis of behavioral manifolds of song learning

- Type of collaboration: Internal joint research

- Researchers:

- Professor Yoko Yazaki-Sugiyama, OIST

- Dr. Ibrahim Alsolami, OIST

- Yaroslav Koborov, PhD student, OIST

2.5 Neuromorphic devices for sequence learning

- Type of collaboration: Internal joint research

- Researchers:

- Yoshifumi Nishi, Toshiba Co. Research and Development Center

- Kuniko Nomura, Toshiba Co. Research and Development Center

- Gaston Sivori, PhD student, OIST

2.6 Decision-making through rule inference

- Type of collaboration: Joint research

- Researchers:

- Haruki Takahashi, PhD student, Kogakuin University

- Prof. Takashi Takekawa, Kogakuin University

- Prof. Yutaka Sakai, Tamagawa University

3. Activities and Findings

3.1 A theta-driven generative model of the hippocampal formation

Tom M George, Kimberly Stachenfeld, Caswell Barry, Claudia Clopath and Tomoki Fukai. NeurIPS 2023.

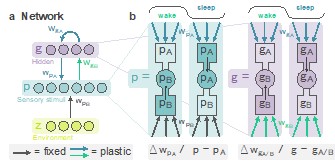

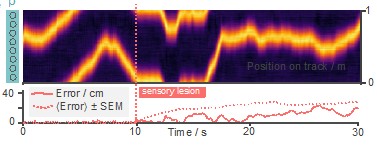

Generative models have long been thought fundamental to animal intelligence, and recent advances in these models have revolutionised machine learning. In animals, the hippocampal formation is thought to learn and use a generative model to support its role in spatial and non-spatial memory. In this study, we proposed a biologically plausible model of the hippocampal formation, equivalent to a Helmholtz machine, that we applied to a temporal stream of inputs. In this model, fast theta-band oscillations (5-10 Hz) gate the direction of information flow throughout the network, training it in a manner akin to a high-frequency wake-sleep algorithm (Fig. 1a, b). Our model accurately infers the latent state of high-dimensional sensory environments and generates realistic sensory predictions. Furthermore, it can learn to path integrate by developing a ring attractor connectivity structure that matches previous theoretical proposals, and it can flexibly transfer this structure between environments (Fig. 1c). Whereas many models trade off biological plausibility against generality, our model captures a variety of hippocampal cognitive functions under one biologically plausible local learning rule. These results shed light on the relationship between biological and artificial intelligence.

Fig. 1: A biologically plausible generative model is trained with theta-frequency wake-sleep cycles and a local learning rule. (a) Network schematic: high-dimensional stimuli from an underlying environmental latent, z, arrive at the basal dendrites of the sensory layer, p, and map to the hidden layer, g (this is the inference model, weights in green). Simultaneously, top-down predictions from the hidden layer g arrive at the apical dendrites of p (this is the generative model, weights in blue). (b) Neurons in layers p and g have three compartments. A fast oscillation gates which dendritic compartment – basal (pB, gB) or apical (pA, gA) – drives the soma. A local learning rule adjusts input weights to minimise the prediction error between dendritic compartments and the soma. (c) Path integration ability is demonstrated in a lesion study: after 10 seconds in the normal oscillatory mode, the network is placed into sleep mode (i.e., generative mode), lesioning the position-dependent sensory inputs. Despite this, HPC continues to encode position accurately, which is evidence that the MEC ring attractor is path integrating the velocity inputs and sending predictions back to HPC. The lower panel shows the accumulated decoding error as well as the mean±SEM over 50 trials.
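The gating and learning scheme described in Fig. 1b can be illustrated with a deliberately small sketch. It is not the published model: it assumes a single sensory neuron, a square-wave gate in place of a smooth theta oscillation, a fixed hidden-layer activity `g`, and a fixed basal drive, and it trains only the apical (generative) weights. All names and parameter values (`theta_gate`, `train_apical`, `lr`, `dt`) are hypothetical.

```python
def theta_gate(t, freq_hz=5.0):
    """Square-wave stand-in for theta: 1.0 in the 'wake' half-cycle
    (basal dendrite drives the soma), 0.0 in the 'sleep' half-cycle
    (apical dendrite drives the soma)."""
    phase = (t * freq_hz) % 1.0
    return 1.0 if phase < 0.5 else 0.0


def dot(w, x):
    return sum(wi * xi for wi, xi in zip(w, x))


def train_apical(steps=2000, dt=0.01, lr=0.05):
    """Train apical weights so the top-down prediction matches the soma."""
    g = [1.0, 0.5]        # hidden-layer activity, held fixed (assumption)
    basal = 0.8           # bottom-up drive from the stimulus (assumption)
    w_apical = [0.0, 0.0]
    for k in range(steps):
        gate = theta_gate(k * dt)
        apical = dot(w_apical, g)
        # The gate selects which dendritic compartment sets the somatic voltage.
        soma = gate * basal + (1.0 - gate) * apical
        # Local rule: reduce the mismatch between the apical dendrite and the soma.
        # In the sleep half-cycle soma == apical, so the apical error vanishes.
        err = soma - apical
        w_apical = [w + lr * err * gi for w, gi in zip(w_apical, g)]
    return w_apical, dot(w_apical, g), basal


if __name__ == "__main__":
    weights, prediction, target = train_apical()
    print("apical prediction:", prediction, "basal drive:", target)
```

In this toy setting the apical weights only change during the wake half-cycle, when the soma carries the bottom-up signal. The full model would also update the basal (inference) weights during the sleep half-cycle, which this sketch omits.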

3.2 Discriminating interfering memories through presynaptic competition

Chi Chung Alan Fung and Tomoki Fukai (2023), PNAS Nexus, 2: pgad161, DOI: https://doi.org/10.1093

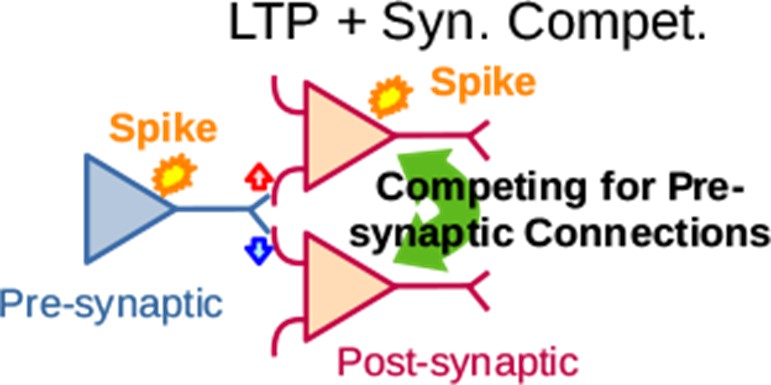

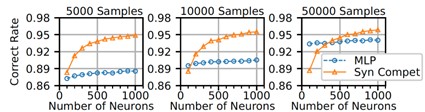

Evidence suggests that hippocampal adult neurogenesis is critical for discriminating highly interfering memories. During adult neurogenesis, synaptic competition modifies the weights of synaptic connections nonlocally across neurons, which distinguishes it from Hebb's local plasticity rule. In this study, we demonstrated in a computational model how synaptic competition separates similar memory patterns, a task at which attractor neural networks perform poorly. In synaptic competition, adult-born neurons are integrated into the existing neuronal pool by competing with mature neurons for synaptic connections from the entorhinal cortex. The novelty of our model is the implementation of synaptic competition between presynaptic terminals (Fig. 2a). We showed that synaptic competition and neuronal maturation play distinct roles in separating interfering memory patterns. When the number of samples was small, our feedforward neural network model trained by a competition-based learning rule outperformed a multilayer perceptron (MLP) trained by the backpropagation algorithm (Fig. 2b). These results highlight the contribution of synaptic competition to pattern separation.

Fig. 2: Presynaptic competition of synapses. (a) LTP is combined with synaptic competition in which synapses compete for presynaptic resources. (b) Performance test on a real-world classification task. Correct rates of the competition-based model and MLP. The two networks were trained with 5,000 (C), 10,000 (D), and 50,000 (E) samples from the MNIST data set.
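The idea of combining LTP with competition for presynaptic resources (Fig. 2a) can be sketched as follows. This is a minimal illustration and not the learning rule of the published model: it assumes that each presynaptic neuron has a fixed resource `budget` shared among all of its outgoing synapses, and that competition acts as a renormalisation after a Hebbian update. The function name and all parameters are hypothetical.

```python
def hebbian_with_presynaptic_competition(W, pre, post, lr=0.1, budget=1.0):
    """One plasticity step on weights W[j][i] (presynaptic i -> postsynaptic j).

    Step 1 (LTP): Hebbian potentiation proportional to pre[i] * post[j].
    Step 2 (competition): the outgoing weights of each presynaptic neuron
    are rescaled to sum to `budget`, so potentiating one synapse weakens
    the other synapses that share the same presynaptic resource.
    """
    n_post, n_pre = len(W), len(W[0])
    new_W = [[W[j][i] + lr * post[j] * pre[i] for i in range(n_pre)]
             for j in range(n_post)]
    for i in range(n_pre):
        total = sum(new_W[j][i] for j in range(n_post))
        if total > 0.0:
            scale = budget / total
            for j in range(n_post):
                new_W[j][i] *= scale
    return new_W


if __name__ == "__main__":
    W0 = [[0.5, 0.5, 0.5],   # synapses onto postsynaptic neuron 0
          [0.5, 0.5, 0.5]]   # synapses onto postsynaptic neuron 1
    W1 = hebbian_with_presynaptic_competition(
        W0, pre=[1.0, 1.0, 0.0], post=[1.0, 0.0])
    for row in W1:
        print([round(w, 3) for w in row])
```

In the example, only postsynaptic neuron 0 is active, yet the synapses from the active inputs onto the silent neuron 1 are weakened as well. This nonlocal effect is what separates the sketch from a purely local Hebbian update.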

3.3 Inherent trade-off in noisy neural communication with rank-order coding

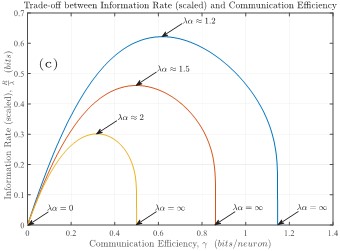

Ibrahim Alsolami and Tomoki Fukai, Phys. Rev. Research 6, L012009 (January 16, 2024).

A possible advantage of temporal coding over rate coding is the speed of signal processing, as the former does not require time-consuming temporal integration of output spikes. Rank-order coding is a form of temporal coding that has emerged as a promising scheme to explain the rapid processing ability of the mammalian brain. This coding scheme hypothesizes that the earliest arriving spikes determine the preferred stimuli of individual sensory neurons. While rank-order coding is gaining interest in diverse research areas beyond neuroscience, much uncertainty still exists about its performance under noise. In this study, we showed what information rates are fundamentally possible and what trade-offs are at stake. An unexpected finding is the emergence of a special class of errors that, in a certain regime, increases as noise decreases. This finding led us to a trade-off inherent in rank-order coding between information rate and communication efficiency.

Fig. 3: Performance of rank-order coding. The trade-off between the information rate R and efficiency is shown. The parameters 1/λ and α refer to the average of the exponentially distributed spike-time jitters and the average interspike interval of presynaptic spikes, respectively. Here, we plot the scaled version of R (that is, Rλ) rather than R, as this eliminates the need to display R for various combinations of λ and α.
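To give an intuition for how jitter corrupts a rank-order code, the following sketch estimates by Monte Carlo how often the firing order of a short spike sequence survives noise. It does not reproduce the information-rate analysis of the paper. It assumes evenly spaced nominal spike times with interval `alpha` (the paper treats α as an average interval) and an independent exponential delay with mean 1/λ added to each spike. The function name, trial count, and seed are hypothetical.

```python
import random


def order_preserved_fraction(n_spikes, alpha, lam, trials=2000, seed=0):
    """Fraction of trials in which jittered spikes arrive in their nominal order.

    Nominal spike k is emitted at time k * alpha and delayed by an
    exponentially distributed jitter with rate lam (mean 1 / lam).
    """
    rng = random.Random(seed)
    preserved = 0
    for _ in range(trials):
        arrivals = [k * alpha + rng.expovariate(lam) for k in range(n_spikes)]
        # The rank order survives only if arrival times are strictly increasing.
        if all(a < b for a, b in zip(arrivals, arrivals[1:])):
            preserved += 1
    return preserved / trials


if __name__ == "__main__":
    for alpha in (0.1, 1.0, 10.0):
        frac = order_preserved_fraction(n_spikes=4, alpha=alpha, lam=1.0)
        print("alpha =", alpha, "order preserved in", frac, "of trials")
```

Under these assumptions, two adjacent spikes swap order with probability exp(-λα)/2, so widening the interspike interval protects the rank order at the cost of a longer transmission time. This is only a qualitative picture of the tension between rate and reliability, not the trade-off quantified in Fig. 3.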

4. Publications

4.1 Journals

- Ibrahim Alsolami and Tomoki Fukai. (2024) Inherent Trade-Off in Noisy Neural Communication with Rank-Order Coding. Phys. Rev. Research, 6, L012009, DOI: https://doi.org/10.1103/PhysRevResearch.6.L012009

- Chi Chung Alan Fung and Tomoki Fukai (2023) Competition on presynaptic resources enhances the discrimination of interfering memories, PNAS Nexus, pgad161, DOI: https://doi.org/10.1093/pnasnexus/pgad161

4.2 Books and other one-time publications

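- Tomoki Fukai. (2024) [Designated discussion] Are the hallmarks of memory in the brain multi-level hierarchy and dynamic stability? (in Japanese), Kagaku (Iwanami Shoten), February 2024 issue (Vol. 94, No. 2).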

- Thomas Burns. (2023) Does inhibitory (dys)function account for involuntary autobiographical memory and déjà vu experience? Behavioral and Brain Sciences, 46:E360.

- Thomas Burns. (2023) Epistemic virtues of harnessing rigorous machine learning systems in ethically sensitive domains. Journal of Medical Ethics, 49:536-540.

4.3 Oral and Poster Presentations

Oral Presentations (*invited)

- Toshitake Asabuki and Tomoki Fukai*. Learning optimal models of statistical events in spontaneous neural activity. [Minisymposium] Mathematical modeling, analysis, and simulation for complex neural systems, The 10th International Congress on Industrial and Applied Mathematics (ICIAM 2023), Tokyo, Japan (August 25, 2023).

Poster Presentations

- Gaston Sivori and Tomoki Fukai. Few-shot temporal pattern learning via spike-triggered boosting of somato-dendritic coupling. Cosyne 2024, Lisbon, Portugal (February 29 - March 3, 2024).

- Tom M George, Kimberly Stachenfeld, Caswell Barry, Claudia Clopath and Tomoki Fukai. A generative model of the hippocampal formation trained with theta driven local learning rules. The 37th Conference on Neural Information Processing Systems (NeurIPS 2023), New Orleans, USA (December 10-16, 2023).

- Daniel Müller-Komorowska and Tomoki Fukai. Feedforward and feedback inhibition support classification differently in a spiking neuronal network. Society for Neuroscience Annual Meeting (Neuroscience 2023), Washington D.C., USA (November 11-15, 2023).

- Thomas F Burns and Tomoki Fukai. Simplicial Hopfield networks. International Conference on Learning Representations (ICLR 2023), Kigali, Rwanda (May 1-5, 2023).

- Roman Koshkin and Tomoki Fukai. Unsupervised Detection of Cell Assemblies with Graph Neural Networks. International Conference on Learning Representations (ICLR 2023), Kigali, Rwanda (May 1-5, 2023).

4.4 Seminars (all invited)

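- Tomoki Fukai. The basal ganglia and the hippocampus: an unexpected theoretical relationship. Kyoto University Center for the Evolutionary Origins of Human Behavior, International Symposium on Brain Science - Messages to the Next Generation, Aichi, Japan (March 17, 2024).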

- Tomoki Fukai. Graphical information representations in associative memory models. ipi Seminar, Institute for Physics of Intelligence, The University of Tokyo, Tokyo, Japan (December 27, 2023).

- Tomoki Fukai. Machine learning × brain science: from the mechanisms of learning and memory. Autumn School for Computational Neuroscience (ASCONE2023), Chiba, Japan (November 28, 2023).

- Tomoki Fukai. Special lecture on computational neuroscience, The University of Tokyo, Tokyo, Japan (November 20-21, 2023).

- Tomoki Fukai. The intersection of AI and the brain. Teikyo University, The Advanced Comprehensive Research Organization (ACRO), Tokyo, Japan (June 23, 2023).

5. Intellectual Property Rights and Other Specific Achievements

5.1 Grants

Grant-in-Aid for Scientific Research (S)

- Title: Working principle of the idling brain

- Period: FY2023-2028

- Principal Investigator: Prof. Kaoru Inokuchi (University of Toyama)

- Co-Investigators: Prof. Tomoki Fukai

KAKENHI Grant-in-Aid for Early-Career Scientists

- Title: A Comprehensive Theoretical Survey on Functionalities and Properties of Adult Neurogenesis under the Influence of Slow Oscillations

- Period: FY2019-2023

- Principal Investigator: Dr. Chi Chung Fung

5.2 Award

- Tomoki Fukai. Japanese Neural Network Society 2023 (JNNS 2023) Research Award

6. Meetings and Events

6.1 Event

OIST Computational Neuroscience Course 2023

- Date: June 19 - July 6, 2023

- Venue: OIST Seaside House

- Organizers: Erik De Schutter (OIST), Kenji Doya (OIST), Tomoki Fukai (OIST), and Bernd Kuhn (OIST)

7. Other

- Thomas Burns. Organizing committee member: ICLR 2024 (Tiny Papers Chair), AISTATS 2024 (DEI Chair), ICLR 2023 (DEI Chair)

- Thomas Burns. Program committee member: Associative Memory & Hopfield Networks in 2023 Workshop at NeurIPS