MLDS Unit seminar, Akari Asai (University of Washington), Sean Welleck (University of Washington), Seminar Room C210

Description

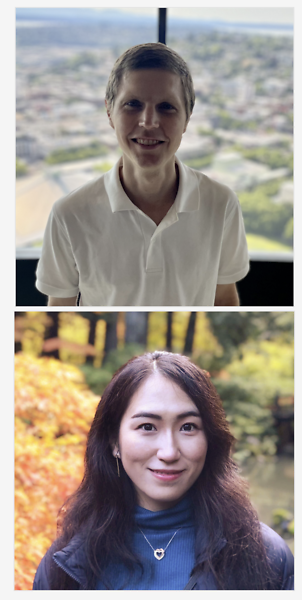

Speaker 1: Akari Asai (University of Washington)

Location: Center Bldg C210

Time: 10:00am

Zoom Link: https://oist.zoom.us/j/95478318970?pwd=NjNUQVJFWmRZRGVOWTBpeFdNRUtyQT09

Title: Retrieval-based Language Models and Applications

Abstract: Language models (LMs) such as GPT-3 and PaLM have shown impressive abilities across a range of natural language processing (NLP) tasks. However, relying solely on their parameters to encode a wealth of world knowledge requires a prohibitively large number of parameters, and hence massive compute, and such models often struggle to learn long-tail knowledge. Moreover, these parametric LMs are fundamentally incapable of adapting over time, often hallucinate, and may leak private data from their training corpus. To overcome these limitations, there has been growing interest in retrieval-based LMs, which combine a non-parametric datastore (e.g., text chunks from an external corpus) with a parametric model. Retrieval-based LMs can outperform LMs without retrieval by a large margin with far fewer parameters, can update their knowledge by replacing their retrieval corpora, and can provide citations that let users easily verify and evaluate their predictions.

In this talk, I'll present a high-level summary of our ACL 2023 tutorial, "Retrieval-based Language Models and Applications," which aims to provide a comprehensive and coherent overview of recent advances in retrieval-based LMs. I will begin with preliminaries covering the foundations of LMs and retrieval systems, and then focus on recent progress in the architectures, learning approaches, and applications of retrieval-based LMs.

Bio: Akari Asai is a Ph.D. student in the Paul G. Allen School of Computer Science & Engineering at the University of Washington, advised by Prof. Hannaneh Hajishirzi. Her research lies in natural language processing and machine learning, with a recent focus on question answering, multilingual NLP, and retrieval-augmented language models. She received the IBM Fellowship in 2022 and the Nakajima Foundation Fellowship in 2019, and was selected as an EECS Rising Star in 2022. Prior to UW, she obtained a B.E. degree in Electrical Engineering and Computer Science from the University of Tokyo.

Speaker 2: Sean Welleck (University of Washington)

Location: Center Bldg C210

Time: 11:00am

Zoom Link: https://oist.zoom.us/j/95478318970?pwd=NjNUQVJFWmRZRGVOWTBpeFdNRUtyQT09

Title: Neural theorem proving

Abstract: Interactive proof assistants enable the verification of mathematics and software using specialized programming languages. The emerging area of neural theorem proving integrates neural language models with interactive proof assistants. The combination is mutually beneficial: proof assistants provide correctness guarantees on language model outputs, while language models help make proof assistants easier to use. This talk gives an overview of two research threads in neural theorem proving, motivated by applications to mathematics and software verification. First, we discuss language models that predict the next step of a proof, centered on recent work that integrates language models into an interactive proof development tool. Second, we introduce the general framework of language model cascades, including recent work that uses language models to guide formal theorem proving with informal proofs. This talk was originally given as part of a tutorial at IJCAI 2023 and is accompanied by a set of interactive notebooks available on GitHub.

Bio: Sean Welleck is an incoming Assistant Professor at Carnegie Mellon University and a Postdoctoral Scholar at the University of Washington. His research focuses on machine learning and language processing, including algorithms for neural language models and their applications to code and mathematics. His work has received an Outstanding Paper Award at NeurIPS 2021 and a Best Paper Award at NAACL 2022. Previously, he obtained B.S.E. and M.S.E. degrees from the University of Pennsylvania and a Ph.D. from New York University, advised by Kyunghyun Cho.
