Hands-on Projects

In the afternoons of the second week, students will work on hands-on projects in one of four topics:

1. Psychophysics

2. MEG

3. MRI

4. Robotics

On the last day, students will present the results of their projects (about one hour for each topic).

Psychophysics

Coordinators:

Naotsugu Tsuchiya (Monash University)

Julian Matthews (Monash University)

Ai Koizumi (CiNet)

Katsunori Miyahara (Harvard University)

Elizaveta Solomonova (University of Montreal)

Students:

Nora Andermane (University of Sussex)

James Blackmon (San Francisco State University)

Anna Cattani (Istituto Italiano di Tecnologia)

Renzo Comolatti (Universidade de São Paulo)

Gokhan Gonul (Middle East Technical University)

Akihito Hasegawa (Tokyo Univ. of Pharmacy and Life Sciences)

Yuko Ishihara (University of Copenhagen)

Sridhar Rajan Jagannathan (University of Cambridge)

Sina Khajehabdollahi (University of Western Ontario)

Michiko Matsunaga (Kyoto University)

Georg Schauer (Max Planck Institute)

Outline:

The aim of this hands-on project is to introduce two exemplar visual psychophysics paradigms. By experiencing psychophysics tasks first-hand, students will learn how to design their own experiments. Students will also learn some basic analysis concepts based on signal detection theory: objective performance and metacognitive sensitivity. A broader aim is to consider what these behavioural techniques might tell us about consciousness and related processes (such as attention, memory and metacognition). We will provide MATLAB code for building psychophysics experiments that employ a dual-task design or Rapid Serial Visual Presentation (RSVP). Students are encouraged to modify this code to examine their own research questions in collaboration with the coordinators.
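
As a rough illustration of the two analysis concepts, the sketch below computes objective performance as d' = z(hit rate) - z(false-alarm rate). It computes metacognitive sensitivity as the area under the type-2 ROC: the probability that a correct trial receives higher confidence than an incorrect one. The course materials are in MATLAB and may use a different measure, for example meta-d'. This Python version is only an illustration under those assumptions. The function names and the 0.5 log-linear correction are choices made for this example.

```python
from statistics import NormalDist

def d_prime(hits, misses, false_alarms, correct_rejections):
    """Type-1 sensitivity d' = z(H) - z(FA).

    A log-linear correction (add 0.5 to each count, 1 to each total) keeps
    rates of exactly 0 or 1 from producing infinite z-scores."""
    h = (hits + 0.5) / (hits + misses + 1)
    fa = (false_alarms + 0.5) / (false_alarms + correct_rejections + 1)
    z = NormalDist().inv_cdf
    return z(h) - z(fa)

def type2_auroc(confidence, correct):
    """Area under the type-2 ROC.

    Probability that a randomly chosen correct trial has higher confidence
    than a randomly chosen incorrect trial (ties count 0.5).
    0.5 means confidence carries no information about accuracy."""
    conf_correct = [c for c, ok in zip(confidence, correct) if ok]
    conf_error = [c for c, ok in zip(confidence, correct) if not ok]
    if not conf_correct or not conf_error:
        raise ValueError("need both correct and incorrect trials")
    score = 0.0
    for cc in conf_correct:
        for ce in conf_error:
            if cc > ce:
                score += 1.0
            elif cc == ce:
                score += 0.5
    return score / (len(conf_correct) * len(conf_error))

# Example: 40 hits, 10 misses, 10 false alarms, 40 correct rejections.
print(d_prime(40, 10, 10, 40))
# Confidence perfectly separates correct from incorrect trials here.
print(type2_auroc([4, 3, 2, 1], [True, True, False, False]))  # 1.0
```

d' does not depend on response bias, which is why it is used as the measure of objective performance. The type-2 AUROC is bias-sensitive, which is one motivation for model-based measures such as meta-d'.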

Schedule:

Day 1

- Introduction to MATLAB and Psychtoolbox for visual psychophysics

- Introduction to psychophysical experiment design and coding

Day 2

- Experiment design and coding continues

Day 3

- Data collection

- Introduction to psychophysical data analysis: objective performance and metacognition

Day 4

- Data analysis continues

- Interpretation and the role of psychophysics in consciousness research

Required resources:

- Laptop (either PC or Mac)

- MATLAB & Psychophysics Toolbox version 3 (http://psychtoolbox.org/)

- Github account recommended (https://github.com/)

- RSVP repository: ISSA-rsvp

- Dual-task repository: ISSA-dual-task

Suggested reading:

- Tsuchiya, N., & Koch, C. (2016). The relationship between consciousness and top-down attention. The Neurology of Consciousness: Cognitive Neuroscience and Neuropathology, 71-91.

- Reddy, L., Wilken, P., & Koch, C. (2004). Face-gender discrimination is possible in the near-absence of attention. Journal of Vision, 4(2), 4-4.

MEG

Coordinators:

Kaoru Amano (CiNet)

Ai Koizumi (CiNet)

Kenichi Yuasa (CiNet)

Jelle P. Bruineberg (University of Amsterdam)

Students:

Carlos Alquezar Baeta (University of Zaragoza)

Vasudha Kowtha (University of Maryland)

Yulia Revina (Nanyang Technological University)

Outline:

This hands-on project provides an opportunity to perform MEG measurements and basic data analyses. In the experiment, we try to identify the neural correlates of visual awareness by analyzing differences in MEG signals between seen and unseen trials. For this purpose, we measure MEG responses evoked by grating stimuli with high (80-100%) and low (threshold-level) contrast. On each trial, subjects judge whether or not they saw the grating.

Schedule:

Day 1 & 2

- MEG measurement and preprocessing

- Detection of stimulus onsets (from photodiode signals)

- Separate trials by contrast and stimulus

- Artifact removal

- Average trials

- Band-pass filtering
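
The onset-detection and averaging steps above can be sketched in plain NumPy. The course itself uses FieldTrip in MATLAB, so this is only an illustration of the logic, not the actual pipeline. The sketch makes three assumptions. A stimulus onset is the first sample where the photodiode trace rises above a fixed threshold. The data are a channels x samples array. Epoch limits are given in samples. Artifact rejection and band-pass filtering are left out.

```python
import numpy as np

def detect_onsets(photodiode, threshold):
    """Sample indices where the photodiode trace rises above `threshold`."""
    above = np.asarray(photodiode) > threshold
    # A rising edge is a sample above threshold whose predecessor is not.
    return np.flatnonzero(above[1:] & ~above[:-1]) + 1

def epoch_average(data, onsets, pre, post):
    """Average baseline-corrected epochs [onset - pre, onset + post).

    data: channels x samples array; pre/post are in samples and positive.
    Epochs that would extend past either end of the recording are skipped."""
    if pre <= 0 or post <= 0:
        raise ValueError("pre and post must be positive")
    epochs = []
    for onset in onsets:
        start, stop = onset - pre, onset + post
        if start < 0 or stop > data.shape[1]:
            continue
        epoch = data[:, start:stop]
        # Subtract each channel's mean over the pre-stimulus baseline.
        epochs.append(epoch - epoch[:, :pre].mean(axis=1, keepdims=True))
    if not epochs:
        raise ValueError("no complete epochs")
    return np.mean(epochs, axis=0)
```

Running `epoch_average` separately on the onsets of each trial type (for example, high- vs. low-contrast, or seen vs. unseen) yields the condition-wise evoked responses compared on Day 3.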

Day 3

- Compare the responses between high and low contrast stimuli, or between seen and unseen stimuli

- Peak response amplitude/latency

- Iso-contour map
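
The peak amplitude/latency comparison can be illustrated with a small helper. It finds the largest absolute deflection of one channel's averaged response inside a time window. The window bounds and the choice of "largest absolute value" as the peak definition are assumptions for this sketch. A real analysis might instead look for a specific component polarity.

```python
import numpy as np

def peak_amplitude_latency(evoked, times, window):
    """Largest absolute deflection of a 1-D evoked response within
    window = (t_start, t_end). Returns (signed amplitude, latency)."""
    evoked = np.asarray(evoked, dtype=float)
    times = np.asarray(times, dtype=float)
    in_window = np.flatnonzero((times >= window[0]) & (times <= window[1]))
    if in_window.size == 0:
        raise ValueError("window contains no samples")
    # Index (into the full arrays) of the largest |amplitude| in the window.
    idx = in_window[np.argmax(np.abs(evoked[in_window]))]
    return evoked[idx], times[idx]

times = np.linspace(0.0, 0.4, 5)          # 0.0, 0.1, ..., 0.4 s
evoked = [0.0, 1.0, -3.0, 2.0, 0.0]       # toy averaged response
amp, lat = peak_amplitude_latency(evoked, times, (0.05, 0.35))
```

Applying the same function to the high- and low-contrast (or seen and unseen) averages gives paired amplitude and latency values to compare.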

Day 4

- Advanced analysis and preparation for a presentation

- Source localization

- Granger causality

Required resources:

- Each student should bring a laptop (Mac or PC; at least 4 GB of memory and 20 GB of free disk space)

- MATLAB (e.g. trial license: https://www.mathworks.com/programs/trials)

- FieldTrip toolbox: http://www.fieldtriptoolbox.org/

Provided resources:

- For heavy computation, a shared desktop PC is provided.

Suggested reading:

- Experimental and theoretical approaches to conscious processing. Dehaene S, Changeux JP. Neuron. 2011 Apr 28;70(2):200-27

- Oscillatory synchronization in large-scale cortical networks predicts perception. Hipp JF, Engel AK, Siegel M. Neuron. 2011 Jan 27;69(2):387-96

- Attention but not awareness modulates the BOLD signal in the human V1 during binocular suppression. Watanabe M, Cheng K, Murayama Y, Ueno K, Asamizuya T, Tanaka K, Logothetis N. Science. 2011 Nov 11;334(6057):829-31. doi: 10.1126/science.1203161.

MRI

Coordinators:

Shinji Nishimoto (CiNet)

Satoshi Nishida (CiNet)

Students:

Ryo Aoki (RIKEN Brain Science Institute)

Elaine van Rijn (Duke-NUS Medical School)

He Zheng (Max Planck Florida Institute for Neuroscience)

Outline:

This hands-on project aims to provide experience with human fMRI experiments and data analysis by building a decoding model of brain activity. More specifically, we will study how consciously modifying one's subjective experience changes brain activity, and test whether such experiences can be decoded with a brain-decoding paradigm. Participants are welcome to suggest which kinds of conscious modification should be tested (e.g., attention).
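
A decoding model of this kind is often a regularized linear mapping from voxel responses to stimulus or task features. The sketch below fits closed-form ridge regression on simulated data and scores decoding by the correlation between predicted and actual features on held-out samples. It is not the coordinators' pipeline. Several pieces are illustrative assumptions: the simulated data, the regularization value `alpha=10.0`, and the absence of z-scoring and cross-validation.

```python
import numpy as np

def fit_ridge(X, Y, alpha):
    """Closed-form ridge regression: W = (X^T X + alpha * I)^-1 X^T Y.

    X: samples x voxels (brain activity); Y: samples x stimulus features."""
    n_voxels = X.shape[1]
    return np.linalg.solve(X.T @ X + alpha * np.eye(n_voxels), X.T @ Y)

# Simulated data only: stimulus features S drive voxel responses X through
# a random mixing matrix plus Gaussian noise (a stand-in for real fMRI data).
rng = np.random.default_rng(0)
n_train, n_test, n_voxels, n_features = 200, 50, 100, 3
S = rng.normal(size=(n_train + n_test, n_features))
mixing = rng.normal(size=(n_features, n_voxels))
X = S @ mixing + rng.normal(size=(n_train + n_test, n_voxels))

# Fit the decoder on training samples, then predict held-out features.
W = fit_ridge(X[:n_train], S[:n_train], alpha=10.0)
S_hat = X[n_train:] @ W

# Decoding accuracy per feature: correlation of predicted vs. actual values.
r = [np.corrcoef(S_hat[:, k], S[n_train:, k])[0, 1] for k in range(n_features)]
```

With real data, `S` would hold the features of each condition (for example, attended vs. unattended content). `alpha` would typically be chosen by cross-validation within the training set.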

Schedule:

Day 1

- fMRI experiments (obtaining training data for building a decoding model)

Day 2

- fMRI experiments (obtaining more data under various cognitive conditions)

- Data analysis

Day 3

- Data analysis

Day 4

- Discussion/Preparing for a presentation

Required resources:

- Each student should bring a laptop (Mac or PC; at least 4 GB of memory and 20 GB of free disk space)

- MATLAB (e.g. trial license: https://www.mathworks.com/programs/trials)

- SPM toolbox: http://www.fil.ion.ucl.ac.uk/spm/

Provided resources:

- For heavy computation, shared desktop PCs are provided.

Suggested reading:

- Nishimoto S, Vu AT, Naselaris T, Benjamini Y, Yu B, Gallant JL. (2011) Reconstructing visual experiences from brain activity evoked by natural movies. Current Biology, 21(19):1641-1646.

- Çukur T, Nishimoto S, Huth AG, Gallant JL. (2013) Attention during natural vision warps semantic representation across the human brain. Nature Neuroscience, 16(6):763-770.

- Huth AG, Lee T, Nishimoto S, Bilenko NY, Vu AT, Gallant JL. (2016) Decoding the semantic content of natural movies from human brain activity. Front. Syst. Neurosci. 10:81.

Robotics

Students will select one of two projects depending on their skills and interests.

Introductory slides: Robotics_handson_slides.pdf

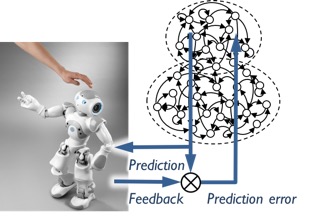

Project 1: Modeling of Predictive Coding for Self-Recognition by NAO

Coordinators:

Yukie Nagai (CiNet)

Jorge L. Copete (Osaka University)

Ray X. Lee (OIST)

Olaf Witkowski (ELSI)

Students:

David Camilo Alvarez Charris (Univ. Autónoma de Occidente)

Manuel Baltieri (University of Sussex)

Iulia Comsa (University of Cambridge)

Calum Imrie (University of Edinburgh)

Outline:

Predictive coding is regarded as a key concept for explaining human cognitive processes [1] and is, consequently, considered crucial for the acquisition of self-recognition. Within the predictive coding framework, self-recognition is modeled as identifying one's own body as the most probable cause of events detected by the other sensory systems that monitor the state of the body (e.g., touch, motion) [2][3]. For example, a visually observed touch on one's own skin is temporally congruent with the touch detected by one's somatosensory system, so the two become associated with each other. In contrast, contact between two external, non-bodily objects never evokes a somatosensory event, so the prior probability of a somatosensory event following a touch on such objects is very low.
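
The contingency described in this paragraph can be made concrete with a toy estimate. For a given object, count how often a seen touch is followed within a short window by a felt (tactile) event. Then take the posterior mean under a uniform Beta(1, 1) prior. This illustrates only the statistic the paragraph describes. It is not the project's RNN-based model. The function name, the 0.2 s window and the 0.5 decision threshold are hypothetical choices.

```python
from bisect import bisect_left

def p_tactile_given_seen_touch(seen_touch_times, tactile_times, window=0.2):
    """Estimate P(tactile event | seen touch on an object).

    A seen touch counts as 'followed' if a tactile event occurs within
    `window` seconds at or after it. Returns the posterior mean under a
    uniform Beta(1, 1) prior, i.e. (followed + 1) / (n + 2)."""
    tactile = sorted(tactile_times)
    followed = 0
    for t in seen_touch_times:
        i = bisect_left(tactile, t)  # first tactile event at or after t
        if i < len(tactile) and tactile[i] - t <= window:
            followed += 1
    return (followed + 1) / (len(seen_touch_times) + 2)

tactile = [1.05, 2.1, 3.02]                 # felt touches (s)
own_hand = [1.0, 2.0, 3.0]                  # seen touches on the robot's own hand
other_object = [1.5, 2.5]                   # seen touches between external objects
p_own = p_tactile_given_seen_touch(own_hand, tactile)           # 0.8
p_other = p_tactile_given_seen_touch(other_object, tactile)     # 0.25
is_self = {name: p > 0.5 for name, p in [("own_hand", p_own), ("other", p_other)]}
```

The prior keeps the estimate away from 0 and 1 when only a few touches have been observed. The estimate sharpens as interaction data accumulate, loosely mirroring the developmental account above.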

The objective of this project is to implement and analyze a cognitive mechanism for self-recognition in the humanoid robot NAO. Expected outcomes are:

- To become familiar with manipulating sensorimotor signals in humanoid robots.

- To understand the development of self-recognition and its relation to predictive coding.

- To learn to use and analyze neural networks and robot interaction patterns in synthetic approaches to cognition.

Schedule:

- Participants will discuss how to implement self-recognition based on the concepts of predictive coding.

- Participants will perform basic software/hardware configuration (e.g., modifying neural network parameters, creating robot interaction patterns) to realize their proposal for self-recognition acquisition.

- Participants will carry out interaction training with the robot and analyze the behavioral and internal development of the robot's cognition.

Required skills:

Basic knowledge of Python and/or C++, and a basic understanding of algorithmic concepts for image processing.

Required resources:

- A laptop with a working Linux distribution (e.g., Ubuntu 16.04)

Provided resources:

- humanoid robot NAO with visual, proprioceptive and tactile modalities (Refer to [4] for basics on NAO software).

- modules for color-based object detection, proprioceptive data (joint angles) extraction, and tactile detection.

- module of a Recurrent Neural Network (Refer to [5] and [6] for practical examples of RNN implementations).

- set of tools for data analysis.

Suggested reading:

- [1] Kilner, J. M., K. J. Friston, and C. D. Frith. "Predictive coding: an account of the mirror neuron system." Cognitive Processing, 2007.

- [2] Apps, Matthew AJ, and Manos Tsakiris. "The free-energy self: a predictive coding account of self-recognition." Neuroscience & Biobehavioral Reviews 41 (2014): 85-97.

- [3] Yuji Kawai, Yukie Nagai, and Minoru Asada, "Perceptual Development Triggered by its Self-Organization in Cognitive Learning," in Proceedings of the 2012 IEEE/RSJ International Conference on Intelligent Robots and Systems, pp. 5159-5164, October 2012

- [4] http://doc.aldebaran.com/2-1/dev/tools/opennao.html

- [5] Ito, Masato, and Jun Tani. "On-line imitative interaction with a humanoid robot using a dynamic neural network model of a mirror system." Adaptive Behavior 12.2 (2004): 93-115.

- [6] Ito, Masato, et al. "Dynamic and interactive generation of object handling behaviors by a small humanoid robot using a dynamic neural network model." Neural Networks 19.3 (2006): 323-337.

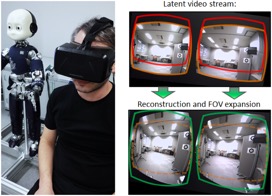

Project 2: Design of Experiment of Body Ownership Using iCub and VR

Coordinators:

Yukie Nagai (CiNet)

Konstantinos Theofilis (Osaka University)

Ray X. Lee (OIST)

Olaf Witkowski (ELSI)

Students:

Jackie Gottshall (Weill Cornell Medicine)

Felipe León (University of Copenhagen)

Gabriel Axel Montes (University of Newcastle)

Akiko Uematsu (Hokkaido University)

Outline:

Body Ownership Experience (BOE), i.e., the experience of owning and identifying with a particular body, is a central aspect of self-awareness [1]. The most common experiments on BOE are based on the Rubber Hand Illusion (RHI) and its variations [2], in which participants come to experience a fake body part as their own under certain sensory conditions. Advances in Virtual Reality (VR) and robotics open up experimental scenarios that go beyond the classic RHI approaches: the fake body part that the subject experiences as their own can take part in actions and receive feedback from the environment. These capabilities let us control the robot and perceive the world from its first-person view, which raises new questions such as:

- What are the necessary conditions for extending our body to the robot (e.g., types and timing of sensory feedback)?

- How does the experience of the extended-self modify our body image?

- What is the role of actions in perceiving the extended self?
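
Questions about the timing of sensory feedback are typically studied by contrasting synchronous and asynchronous visuo-tactile stimulation, as in the classic RHI. The sketch below shows one way to generate such conditions. It builds seen/felt stroke times for a given delay and a shuffled trial list over several delays. Everything here is hypothetical: the function names, the 1 s stroke interval, the delay values in the example and the uniform jitter. Actual timing on the iCub/VR setup would come from the provided building blocks.

```python
import random

def stroke_schedule(n_strokes, interval, delay, jitter=0.0, seed=None):
    """Times (s) of strokes seen on the fake/robot hand and felt on the
    participant's hand. delay = 0 gives the synchronous condition;
    delay > 0 makes touch lag vision. Optional uniform timing jitter."""
    rng = random.Random(seed)
    seen = [i * interval for i in range(n_strokes)]
    felt = [t + delay + rng.uniform(-jitter, jitter) for t in seen]
    return seen, felt

def randomized_trials(delays, repetitions, seed=None):
    """Each delay condition repeated `repetitions` times, in shuffled order."""
    trials = [d for d in delays for _ in range(repetitions)]
    random.Random(seed).shuffle(trials)
    return trials

# Hypothetical design: synchronous vs. two asynchronous delays, 5 trials each.
for delay in randomized_trials([0.0, 0.3, 0.6], repetitions=5, seed=42):
    seen, felt = stroke_schedule(n_strokes=20, interval=1.0, delay=delay)
```

Shuffling the conditions with a fixed seed keeps the order unpredictable for the participant while keeping it reproducible for analysis.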

The objective of this project is to design and implement experiments that address research questions regarding BOE.

Schedule:

- Learning about existing designs of BOE/RHI experiments and how the use of a robot expands on the research capabilities in such scenarios

- Participation as subjects in preexisting BOE/RHI experiments

- Familiarization with the capabilities of the iCub, the VR headset, the sensors and the software

- Participants will design and set up their own experiments and run pilot tests

Required skills:

No particular technical skill is required. If a participant wants to program the robot, he/she should have basic skills in C++/Python.

Required resources:

- A laptop for data analysis

Provided hardware/software:

- The iCub humanoid robot

- Virtual reality headsets

- Assortment of other sensors (e.g., motion capture cameras, depth sensors)

- A desktop computer for each group with all the software needed for quick prototyping of experiments

- Preprogrammed building blocks for setting up experiments without extra coding

Suggested reading:

- Bermudez, J.L., Marcel, A. & Eilan, N. (ed. 1995). The body and the self. MIT Press.

- Costantini, M., & Haggard, P. (2007). The rubber hand illusion: Sensitivity and reference frame for body ownership. Consciousness and Cognition, 16, 229-240.

- Apps, M., & Tsakiris, M. (2014). The free-energy self: A predictive coding account of self-recognition. Neuroscience & Biobehavioral Reviews, 41, 85-97.

- Makin, T. R., Holmes, N. P., & Ehrsson, H. H. (2008). On the other hand: Dummy hands and peripersonal space. Behavioural Brain Research, 191, 1-10.

- Tsakiris, M, Prabhu, G., & Haggard, P. (2006). Having a body versus moving your body: How agency structures body-ownership. Consciousness and Cognition. 15, 423-432.

- Kammers, M.P.M., de Vignemont, F., Verhagen, L. & Dijkerman, H.C. (2009). The rubber hand illusion in action. Neuropsychologia. 47, 204-211.

- Murray, M.M. & Wallace, M.T. (ed. 2012). Multisensory perception and bodily self-consciousness. The neural bases of multisensory processes. Boca Raton (FL). CRC Press/Taylor & Francis.

- Tsakiris, M. (2010). My body in the brain: A neurocognitive model of body-ownership. Neuropsychologia. 48, 703-712.

- If a participant wants to program the robot instead of using the prebuilt software blocks, he/she should be familiar with the YARP/iCub software (see: http://wiki.icub.org/iCub/main/dox/html/icub_tutorials.html). The software is freely available and can be downloaded and installed on personal laptops before the summer school.