[Hybrid Seminar] "LLM (Large Language Model) + Android" by Prof. Takashi Ikegami, The University of Tokyo

Date

Location

Description

TITLE: LLM (Large Language Model) + Android

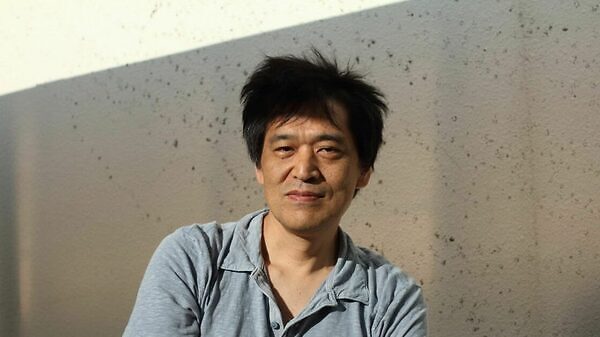

SPEAKER: Prof. Takashi Ikegami, The University of Tokyo

ABSTRACT: We successfully endowed a Large Language Model (LLM), specifically OpenAI's ChatGPT-4, with embodiment through our custom-developed android, ALTER3. Intriguingly, ALTER3 was capable of assuming various poses, such as a 'Selfie' pose, without requiring detailed instructions for each body part. Many of these poses were akin to those of top-tier pantomimes. When adjustments were needed, we could offer verbal feedback to ALTER3 (e.g., 'Your hand is too close to your face!'), much like instructing a child, rather than resorting to programming.

One challenge for societal implementation is the latency of real-time generation. Additionally, conversations with ALTER3 are not open-ended; they loop. We will propose several solutions to address these issues.
