Adaptive Systems Group

Goal

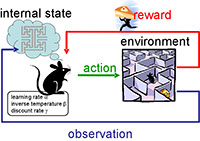

Recent advances in artificial neural networks and machine learning have allowed us to build robots and virtual agents that can learn a variety of goal-directed behaviors. However, the performance of a learning system depends critically on:

- the meta-parameters that control how the system's detailed parameters change during learning;

- the reward functions that define what is to be learned;

- the representation of the state space;

- the learning algorithm used by the agent.

In many applications, human experts design and tune these factors by trial and error. This need for careful hand-tuning is one of the major reasons why intelligent robots cannot yet perform well in real-world environments. In contrast to current artificial learning systems, humans and animals can learn novel behaviors in a wide variety of environments while satisfying the basic constraints of self-preservation and self-reproduction.

The goal of the Adaptive Systems Group is to understand the neural mechanisms necessary for artificial agents that face the same fundamental constraints as biological agents.

Cyber Rodents

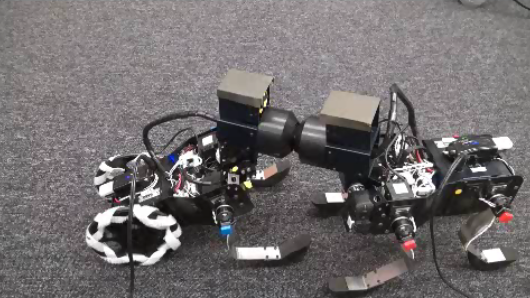

Based on the theories of reinforcement learning and evolutionary computation, we explore parallel learning mechanisms using a colony of small, rodent-like mobile robots called Cyber Rodents.

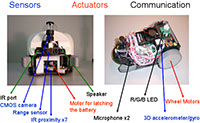

Each Cyber Rodent has an omnidirectional vision system as its eye, infrared proximity sensors as its whiskers, two wheels for locomotion, and a three-color LED for emotional communication. In particular, the Cyber Rodent is capable of survival, by recharging itself from external battery packs, and of reproduction in software, by exchanging genes (programs or data) with other robots through an infrared communication port.

Research Topics

We aim to develop computational frameworks for autonomously adapting the factors listed above (meta-parameters, reward functions, state representations, and learning algorithms) in biologically plausible ways. Our research topics include the following:

- Evolution of neural controllers

- Evolution of meta-parameters in reinforcement learning

- Evolution of hierarchical architectures in reinforcement learning

- Evolution of reward functions that enable efficient learning and evolution

- Cooperation and competition of multiple heterogeneous reinforcement learners sharing the same sensory-motor system
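The topic "Evolution of meta-parameters in reinforcement learning" can be illustrated with a small sketch. It assumes a hypothetical two-armed bandit task, a Q-learner whose meta-parameters are its learning rate (alpha) and softmax inverse temperature (beta), and a simple elitist genetic algorithm that scores each (alpha, beta) genome by the total reward it collects while learning. The task, parameter ranges, and function names are illustrative assumptions only; this is not the method used on the Cyber Rodents.

```python
import math
import random

# Hypothetical toy task (not from the group's experiments): a two-armed bandit
# whose arms pay a reward of 1 with these probabilities.
ARM_PROBS = (0.2, 0.8)


def softmax_choice(q, beta, rng):
    """Sample an action with probability proportional to exp(beta * Q(a))."""
    m = max(q)  # subtract the max for numerical stability
    weights = [math.exp(beta * (v - m)) for v in q]
    r = rng.random() * sum(weights)
    for action, w in enumerate(weights):
        r -= w
        if r <= 0:
            return action
    return len(q) - 1


def fitness(genome, trials=200, seed=0):
    """Total reward earned by a Q-learner that uses the genome's meta-parameters."""
    alpha, beta = genome  # learning rate and inverse temperature
    rng = random.Random(seed)
    q = [0.0] * len(ARM_PROBS)
    total = 0.0
    for _ in range(trials):
        a = softmax_choice(q, beta, rng)
        reward = 1.0 if rng.random() < ARM_PROBS[a] else 0.0
        q[a] += alpha * (reward - q[a])  # alpha sets the step size of each update
        total += reward
    return total


def mean_fitness(genome, n_seeds=3):
    """Average over a few fixed seeds to reduce evaluation noise."""
    return sum(fitness(genome, seed=s) for s in range(n_seeds)) / n_seeds


def mutate(genome, rng, sigma=0.1):
    """Gaussian mutation, clipped to alpha in [0.01, 1] and beta in [0, 20]."""
    alpha, beta = genome
    alpha = min(1.0, max(0.01, alpha + rng.gauss(0.0, sigma)))
    beta = min(20.0, max(0.0, beta + rng.gauss(0.0, 10 * sigma)))
    return (alpha, beta)


def evolve(generations=30, pop_size=20, n_elite=5, seed=1):
    """Truncation selection with elitism: keep the best genomes, refill with mutants."""
    rng = random.Random(seed)
    population = [(rng.uniform(0.01, 1.0), rng.uniform(0.0, 20.0))
                  for _ in range(pop_size)]
    for _ in range(generations):
        ranked = sorted(population, key=mean_fitness, reverse=True)
        elite = ranked[:n_elite]
        population = elite + [mutate(rng.choice(elite), rng)
                              for _ in range(pop_size - n_elite)]
    return max(population, key=mean_fitness)


if __name__ == "__main__":
    best_alpha, best_beta = evolve()
    print(f"alpha={best_alpha:.2f}, beta={best_beta:.2f}, "
          f"mean reward={mean_fitness((best_alpha, best_beta)):.1f}")
```

Because fitness is the reward collected during learning, selection in this sketch favors meta-parameters that learn quickly, not merely ones that eventually settle on the better arm. A discount factor is left out because a bandit has no delayed reward; a sequential task would add it as a third gene.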

Selected Publications

Please see our publication list for more details.