FY2018 Annual Report

Cognitive Neurorobotics Research Unit

Professor Jun Tani

From left: Takazumi, Tomoe, Nadine, Jungsik, Fabien, Minju, Jeffrey, Jun, Hendry, Ahmadreza, Hengameh, Ho Ching, Prasanna, Siqing, Dongqi, Wataru (10/11/2018)

Abstract

The Cognitive Neurorobotics Research Unit started in September 2017. During its first 18 months, the lab has grown to include five new postdoctoral scholars and four OIST graduate students. The initial research results were mainly produced by three special research students (who came from KAIST) and a technical staff member, continuing studies begun in my previous lab at KAIST. These three special research students were awarded PhDs by KAIST this spring. Other research results came from collaborative studies with researchers at Waseda University, the National Center of Neurology and Psychiatry, and the University of Hamburg.

1. Staff

As of March 31, 2019

- Prof. Jun Tani, Professor

- Dr. Takazumi Matsumoto, Postdoctoral Scholar

- Dr. Fabien Benureau, Postdoctoral Scholar

- Dr. Hendry Ferreira Chame, Postdoctoral Scholar

- Dr. Jeffrey Queißer, Postdoctoral Scholar

- Dr. Ahmadreza Ahmadi, Postdoctoral Scholar

- Dr. Jeffrey White, Visiting researcher (Assistant Professor, University of Twente)

- Dr. Shingo Murata, Visiting researcher (Assistant Professor, National Institute of Informatics / Graduate University for Advanced Studies)

- Mr. Dongqi Han, PhD Student

- Ms. Nadine Wirkuttis, PhD Student

- Mr. Wataru Ohata, PhD Student

- Mr. Prasanna Vijayaraghavan, PhD Student

- Mr. Vsevolod Nikulin, Rotation Student

- Mr. Siqing Hou, Research Intern

- Ms. Tomoe Furuya, Research Unit Administrator

2. Collaborations

2.1 Computational psychiatry on autism spectrum disorder (ASD)

- Description: Possible neuronal mechanisms of ASD are investigated by conducting synthetic neurorobotics experiments using a predictive coding type neural network model.

- Type of collaboration: Joint research

- Researchers:

- Dr. Yuichi Yamashita, National Center of Neurology and Psychiatry

- Prof. Tetsuya Ogata and Mr. Hayato Idei, Waseda University

- Jun Tani, OIST

2.2 Investigation of neural mechanisms for lifelong learning

- Description: We investigate specialized neural network mechanisms which are required for good adaptation to novel sequential experience while preventing disruptive interference with existing representations in the context of lifelong learning.

- Type of collaboration: Joint research

- Researchers:

- Prof. Stefan Wermter and Dr. German Parisi, University of Hamburg

- Jun Tani, OIST

2.3 Phenomenological analysis of the subjective experience of consciousness and selves

- Description: We investigate possible embodied mind structures accounting for consciousness and selves through phenomenological analysis of experimental results obtained with cognitive neurorobots.

- Type of collaboration: Joint research

- Researchers:

- Prof. Jeff White, University of Twente

- Jun Tani, OIST

3. Activities and Findings

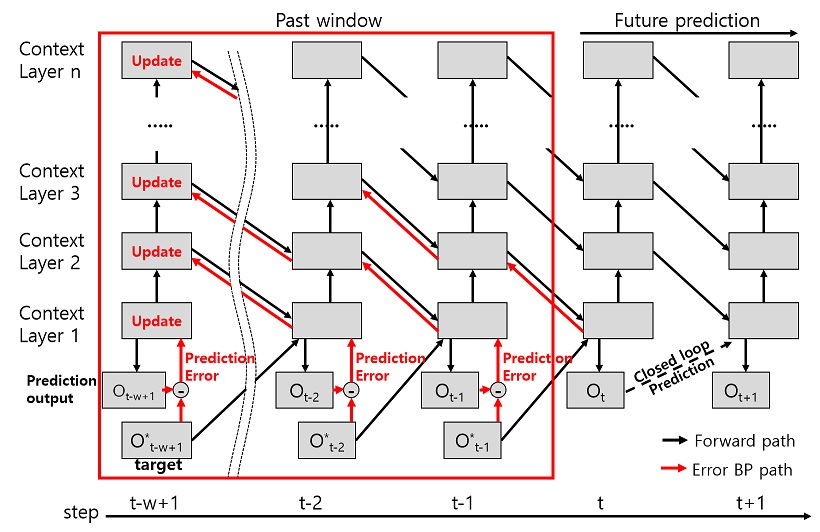

3.1 Predictive coding for perception of video image

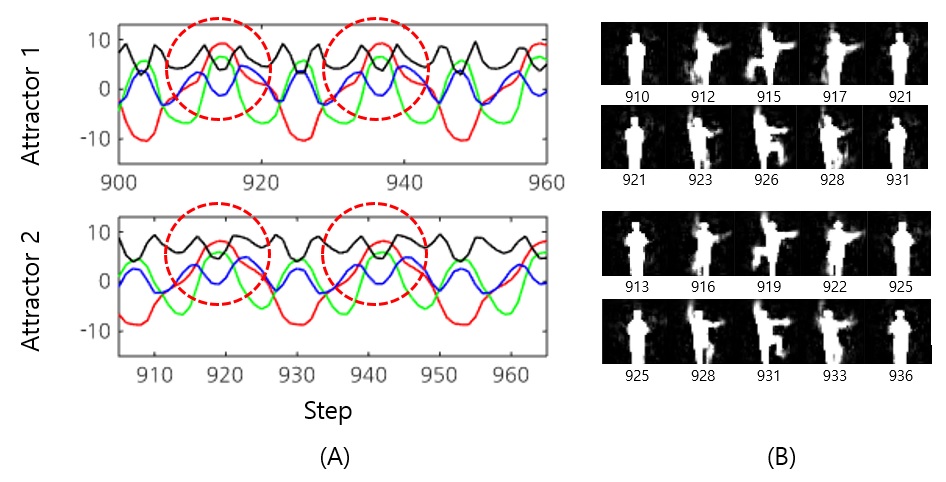

This study proposes a novel predictive coding type neural network model, the predictive multiple spatio-temporal scales recurrent neural network (P-MSTRNN). The P-MSTRNN learns to predict visually perceived human whole-body cyclic movement patterns by exploiting multiscale spatio-temporal constraints imposed on the network dynamics through differently sized receptive fields and different time constant values for each layer. After learning, the network can proactively imitate target movement patterns by inferring or recognizing the corresponding intentions through regression of the prediction error. Simulation results on imitative learning of visual human movement patterns showed that the network develops a functional hierarchy, with a different type of dynamic structure forming at each layer. We examined how model performance in pattern generation and predictive imitation varies with the stage of learning. The number of limit cycle attractors corresponding to target movement patterns increases as learning proceeds, and transient dynamics that develop early in the learning process already perform the pattern generation and predictive imitation tasks successfully. We concluded that exploiting transient dynamics facilitates successful task performance during early learning periods. To my knowledge, this study is the first to show that pixel-level video images can be generated and recognized through a functional hierarchy and compositionality that self-organize during learning, by taking advantage of the multiple spatio-temporal scale constraints imposed on the neurodynamics of the proposed model.

Figure 1: Schematic of predictive imitation by error regression in the P-MSTRNN model.

Figure 2: Generation of two different learned video image sequence patterns and their corresponding neural attractor dynamics.
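The multiple-timescale constraint described above can be illustrated with leaky-integrator units whose time constant differs by layer. The following is a minimal sketch, not the P-MSTRNN itself: it assumes just two fully connected layers (the convolutional layers with differently sized receptive fields are omitted), and the layer sizes, the weight names in `W` and the time constants are hypothetical choices for illustration.

```python
import numpy as np


def leaky_update(u, drive, tau):
    """Leaky-integrator update: u <- (1 - 1/tau) * u + (1/tau) * drive."""
    return (1.0 - 1.0 / tau) * u + drive / tau


def mtrnn_step(u_fast, u_slow, x, W, tau_fast=2.0, tau_slow=20.0):
    """One step of a hypothetical two-layer network with fast and slow units.

    W holds hypothetical weight matrices: "in" (input -> fast),
    "ff" (fast -> fast), "sf" (slow -> fast), "fs" (fast -> slow),
    "ss" (slow -> slow).
    """
    h_fast, h_slow = np.tanh(u_fast), np.tanh(u_slow)
    drive_fast = W["in"] @ x + W["ff"] @ h_fast + W["sf"] @ h_slow
    drive_slow = W["fs"] @ h_fast + W["ss"] @ h_slow
    # Same update rule for both layers; only the time constant differs.
    return (leaky_update(u_fast, drive_fast, tau_fast),
            leaky_update(u_slow, drive_slow, tau_slow))


def steps_to_rise(tau, threshold=1.0 - np.exp(-1.0)):
    """Steps a unit starting at 0 needs to reach ~63% of a constant drive of 1."""
    u, steps = 0.0, 0
    while u < threshold:
        u = leaky_update(u, 1.0, tau)
        steps += 1
    return steps


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    n_in, n_fast, n_slow = 3, 8, 4
    shapes = {"in": (n_fast, n_in), "ff": (n_fast, n_fast), "sf": (n_fast, n_slow),
              "fs": (n_slow, n_fast), "ss": (n_slow, n_slow)}
    W = {k: rng.normal(0.0, 0.5, size=s) for k, s in shapes.items()}
    u_fast, u_slow = np.zeros(n_fast), np.zeros(n_slow)
    for t in range(50):
        x = np.sin(0.5 * t + np.arange(n_in))  # toy cyclic input
        u_fast, u_slow = mtrnn_step(u_fast, u_slow, x, W)
    print("tau=2 rises in", steps_to_rise(2.0), "steps; tau=20 in", steps_to_rise(20.0))
```

Here `steps_to_rise(2.0)` returns 2 and `steps_to_rise(20.0)` returns 20: a larger time constant makes a layer integrate over a proportionally longer window, which is what lets slower layers carry longer-term context.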

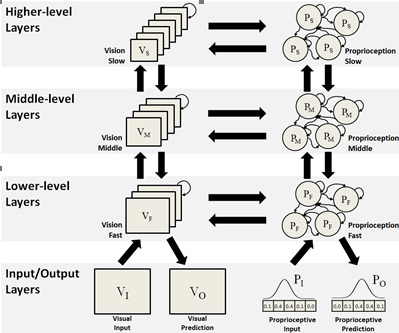

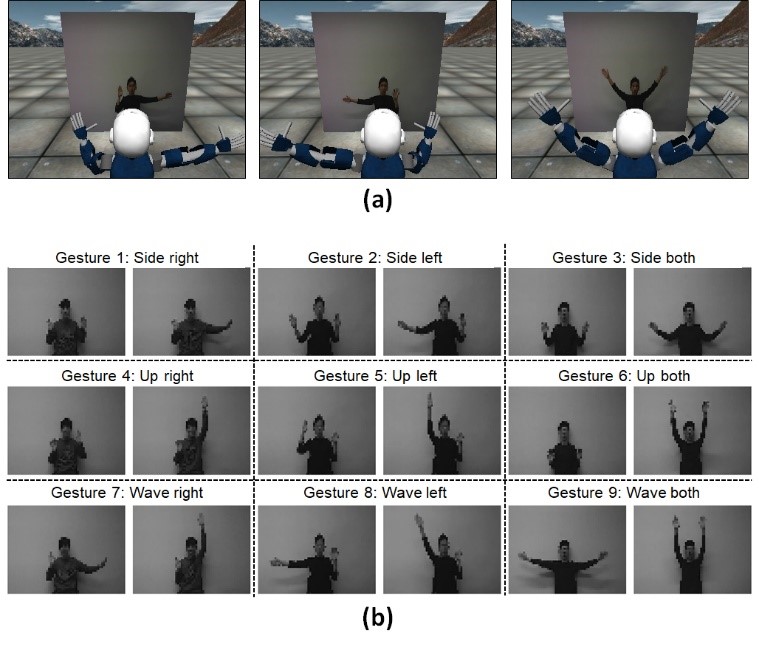

3.2 Imitative interaction between a human and a simulated robot using predictive coding type deep neural network model

This study investigates how adequate cognitive functions for recognizing, predicting, and generating a variety of actions can develop through iterative learning of action-caused dynamic perceptual patterns. In particular, it examined the capacity for mental simulation of one’s own actions and for inference of others’ intentions, because both play a crucial role in social cognition. We propose a dynamic neural network model based on predictive coding that can generate and recognize dynamic visuo-proprioceptive patterns; the P-MSTRNN described in Section 3.1 forms part of this model. The proposed model was examined in a set of robotic simulation experiments in which a robot was trained to imitate visually perceived gesture patterns of human subjects in a simulated environment. The results showed that the model developed a predictive model of imitative interaction through iterative learning of large-scale spatio-temporal patterns in visuo-proprioceptive input streams. The experiments also verified that the model could generate mental imagery of dynamic visuo-proprioceptive patterns without external input. Furthermore, the model could recognize others’ intentions online, by minimizing the prediction error while observing their action patterns. These findings suggest that the error minimization principle of predictive coding could provide a fundamental account of mirror neuron functions, namely generating one’s own actions and recognizing those generated by others in social cognitive contexts.

Figure 3: The proposed visuo-proprioceptive predictive coding type large-scale recurrent neural network model.

Figure 4: The experimental setting, with a simulated iCub robot that learns to imitate visually demonstrated human movement patterns.
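Recognizing intention by minimizing prediction error online (error regression) can be sketched with a deliberately simplified generative model. This is an illustrative stand-in, not the visuo-proprioceptive model above: it assumes the trained network can be summarized as two fixed, hypothetical movement primitives (sinusoids with frequencies in `OMEGAS`) mixed according to a latent intention vector `z`. At each time step, only `z` is updated, by gradient descent on the squared prediction error over a sliding window of recent observations, starting from the previous estimate.

```python
import numpy as np

# Hypothetical frequencies of two "learned" movement primitives.
OMEGAS = np.array([0.3, 0.7])


def basis(t):
    """Primitive activations at time steps t, shape (len(t), 2)."""
    return np.sin(np.outer(np.asarray(t, dtype=float), OMEGAS))


def predict(z, t):
    """Predicted observation: a mixture of primitives selected by intention z."""
    return basis(t) @ z


def error_regression(z, t_window, y_window, lr=0.5, n_iter=10):
    """Gradient descent on mean squared prediction error over a past window.

    Only the latent intention z changes; the "trained" model stays fixed.
    The toy model is linear in z, so the gradient is analytic; a recurrent
    model would backpropagate the error through time instead.
    """
    B = basis(t_window)
    for _ in range(n_iter):
        err = B @ z - y_window
        z = z - lr * (B.T @ err) / len(y_window)
    return z


def observe_stream(z_first, z_second, switch_at, length):
    """Toy observation stream whose underlying intention switches once."""
    t = np.arange(length)
    y = np.empty(length)
    y[:switch_at] = predict(z_first, t[:switch_at])
    y[switch_at:] = predict(z_second, t[switch_at:])
    return y


def track_intention(y, window=20, **kwargs):
    """Online intention estimates, warm-starting each window from the last one."""
    z = np.zeros(len(OMEGAS))
    history = []
    for now in range(window, len(y) + 1):
        t_win = np.arange(now - window, now)
        z = error_regression(z, t_win, y[now - window:now], **kwargs)
        history.append(z.copy())
    return np.array(history)


if __name__ == "__main__":
    y = observe_stream(np.array([1.0, 0.0]), np.array([0.0, 1.0]), switch_at=40, length=80)
    estimates = track_intention(y)
    print("estimate just before switch:", estimates[20].round(3))
    print("estimate at end:", estimates[-1].round(3))
```

In this toy stream the estimate settles near `[1, 0]` before the switch and near `[0, 1]` once the window contains only post-switch observations, so the inferred intention follows the observed behavior without any change to the model's weights.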

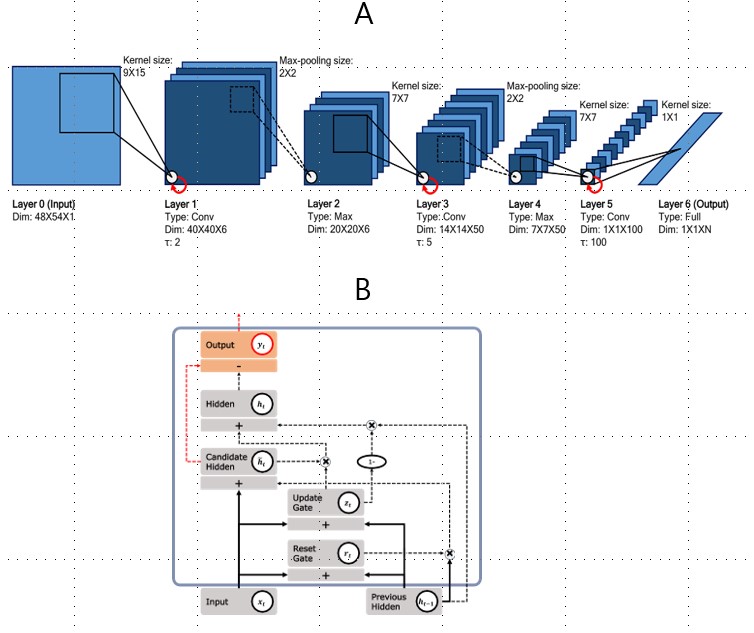

3.3 Adaptive detrending to accelerate convolutional gated recurrent unit training for contextual video recognition

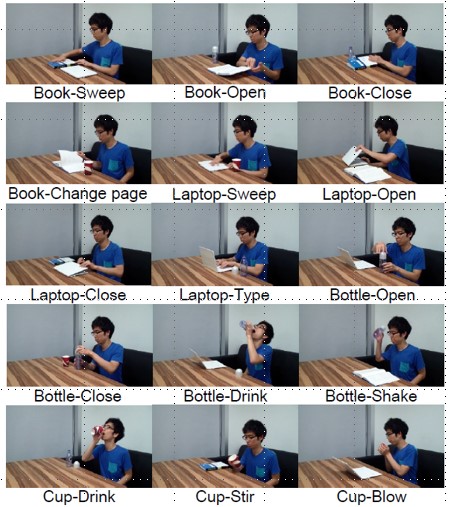

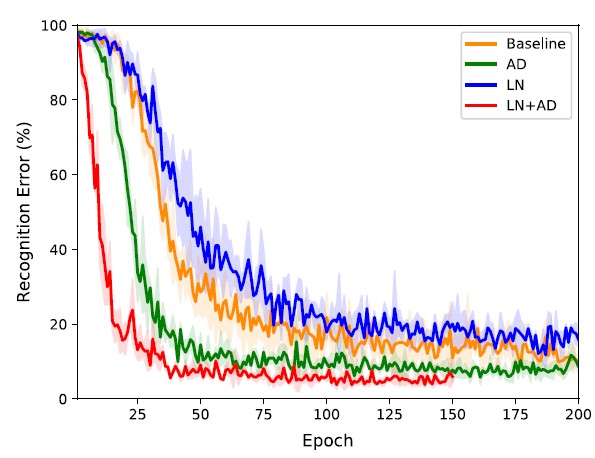

This study proposes “adaptive detrending” (AD), a temporal normalization method for accelerating the training of convolutional recurrent neural networks (ConvRNNs), especially the convolutional gated recurrent unit (ConvGRU). For each neuron in a recurrent neural network (RNN), AD identifies the trending change within a sequence and subtracts it, removing the internal covariate shift. In experiments on contextual video recognition with ConvGRU, the results showed that (1) ConvGRU clearly outperforms feed-forward neural networks, (2) AD consistently and significantly accelerates training and improves generalization, (3) performance improves further when AD is combined with other normalization methods, and, most importantly, (4) the more long-term contextual information a task requires, the more AD outperforms existing methods.

Figure 5: Schematics of (A) the overall proposed architecture and (B) a gated recurrent unit (GRU) with adaptive detrending (AD).

Figure 6: Example video images of object-directed actions used for training and testing the proposed neural network model.

Figure 7: Test recognition error for categorizing video image patterns of human object-directed behavior. The proposed model (LN+AD, shown in red) significantly outperforms the others.
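The report does not spell out how AD estimates the trend, so the following is only a sketch of the general idea of per-neuron detrending. It assumes the trend is an exponential moving average of each neuron's activity, with per-step rates supplied by the caller (for example, gate activations) standing in for the adaptive part; the function name `detrend_sequence` and the `rates` argument are hypothetical, and the published AD formulation may differ.

```python
import numpy as np


def detrend_sequence(x, rates):
    """Subtract a per-neuron moving-average trend from a sequence.

    x: activity of shape (T, N); rates: values in (0, 1], broadcastable to
    x.shape, giving how quickly each neuron's trend follows its activity.
    """
    x = np.asarray(x, dtype=float)
    rates = np.broadcast_to(np.asarray(rates, dtype=float), x.shape)
    trend = np.zeros(x.shape[1])
    out = np.empty_like(x)
    for t in range(x.shape[0]):
        # Exponential moving average: the trend moves toward the current activity.
        trend = (1.0 - rates[t]) * trend + rates[t] * x[t]
        # The detrended activity is what remains after removing the trend.
        out[t] = x[t] - trend
    return out


if __name__ == "__main__":
    t = np.arange(200)
    # One toy neuron: slow upward drift plus a fast oscillation.
    x = (0.02 * t + np.sin(0.8 * t))[:, None]
    y = detrend_sequence(x, rates=0.1)
    print("mean of late activity before:", x[100:].mean(), "after:", y[100:].mean())
```

Because the trend lags the input, a constant offset decays away geometrically while fast fluctuations largely pass through; smaller rates track slower trends. Letting the rate vary per step and per neuron is what would make such detrending adaptive.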

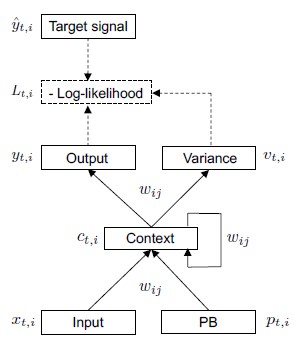

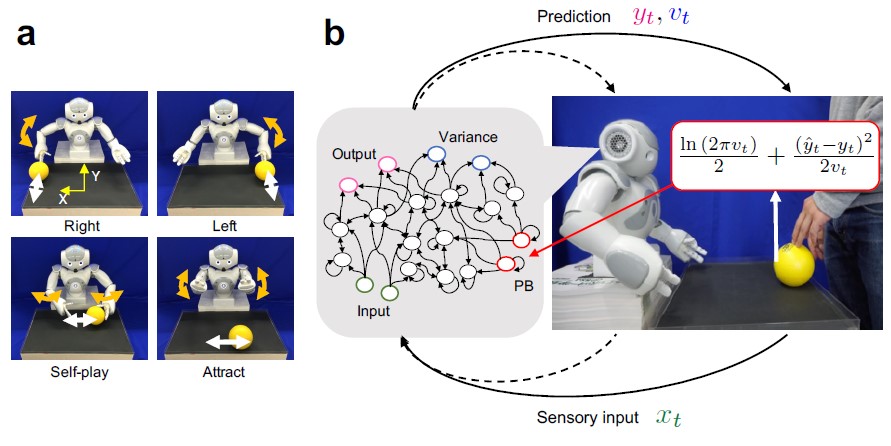

3.4 A neurorobotics simulation of autistic behavior induced by unusual sensory precision

Using a humanoid robot controlled by a neural network with a precision-weighted prediction error minimization mechanism, this study suggests that both increased and decreased sensory precision could induce behavioral rigidity, the resistance to change that is characteristic of autistic behavior. Specifically, decreased sensory precision caused error signals to be disregarded, leading to invariability of the robot’s intention, while increased sensory precision caused an excessive response to error signals, leading to fluctuations and subsequent fixation of intention. The results provide a system-level explanation of the mechanisms underlying different types of behavioral rigidity in autism spectrum disorder and other psychiatric disorders. In addition, the findings suggest that symptoms caused by decreased and increased sensory precision could be distinguished by examining patients’ internal experience and the neural activity that codes prediction error signals in the biological brain.

Figure 8: The stochastic continuous-time recurrent neural network (S-CTRNN) model used in the current study has five groups of neural units: input, context, output, variance, and parametric bias (PB) units. Input units receive the current sensory inputs. Based on the inputs, the PB state, and the context state, the S-CTRNN generates predictions of the mean and variance of future inputs in the output and variance units, respectively.

Figure 9: The robotics experimental setting introduced in the current study. A) Four interactive behavioral patterns learned by a robot controlled by an S-CTRNN with PB. The upper left and upper right figures show the right and left behaviors, respectively. The lower left and lower right figures show the self-play and attract behaviors. B) System overview during adaptive interaction between a robot and an experimenter.
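The precision weighting described above can be made concrete. Assuming the network is trained and adapted by minimizing a Gaussian negative log-likelihood, each unit's prediction error is divided by its predicted variance, i.e. weighted by its precision. The sketch below uses that assumption and replaces the recurrent network with a hypothetical linear readout from parametric bias (PB) values; `precision_gain` is a hypothetical knob that scales the precision up or down to mimic increased or decreased sensory precision, and may not match how the study manipulated it.

```python
import numpy as np


def gaussian_nll(x, mean, var):
    """Negative log-likelihood of targets x under N(mean, var), summed over units."""
    return float(np.sum(0.5 * np.log(2.0 * np.pi * var) + (x - mean) ** 2 / (2.0 * var)))


def weighted_error(x, mean, var, precision_gain=1.0):
    """Gradient of the NLL w.r.t. the predicted mean, (mean - x) / var,
    with the precision 1/var scaled by a hypothetical gain."""
    precision = precision_gain / var
    return precision * (mean - x)


def pb_update(pb, W_out, x, var, precision_gain=1.0, lr=0.1):
    """One gradient step on PB values, assuming a hypothetical linear
    readout mean = W_out @ pb."""
    mean = W_out @ pb
    grad_pb = W_out.T @ weighted_error(x, mean, var, precision_gain)
    return pb - lr * grad_pb


if __name__ == "__main__":
    x = np.array([1.0, 0.0])       # observed sensory input
    var = np.array([0.5, 0.5])     # estimated variance (variance units)
    for gain in (0.1, 1.0, 10.0):  # decreased, normal, increased precision
        print(gain, pb_update(np.zeros(2), np.eye(2), x, var, gain))
```

In this toy, with the target at 1.0, a gain of 0.1 moves the first PB value only to 0.02 (the error is largely disregarded), while a gain of 10 overshoots to 2.0 (an excessive response), echoing the two regimes described above.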

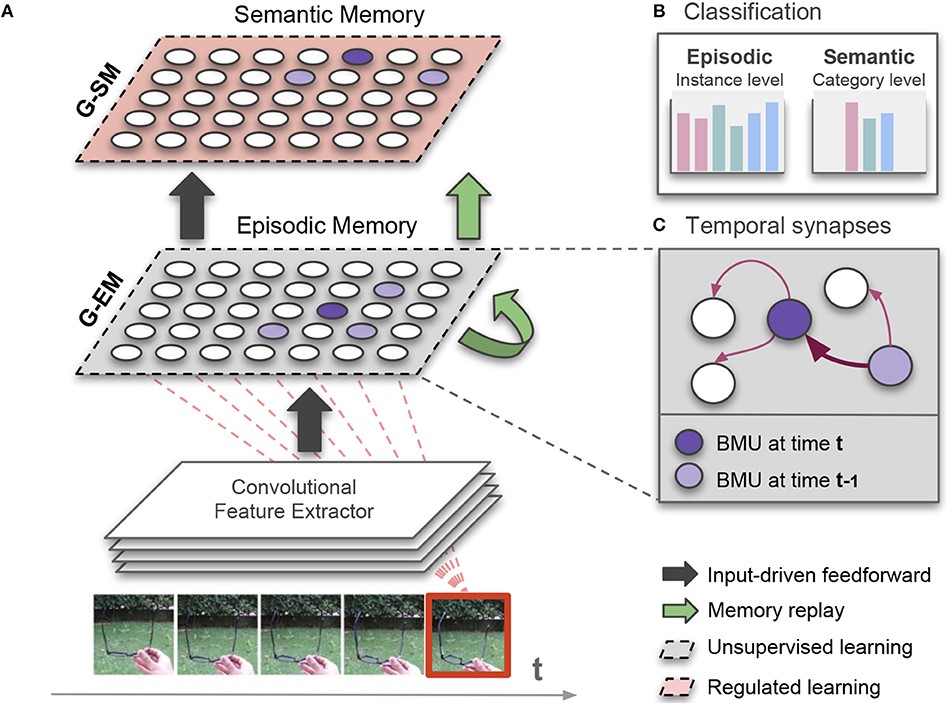

3.5 Neural mechanisms of lifelong learning

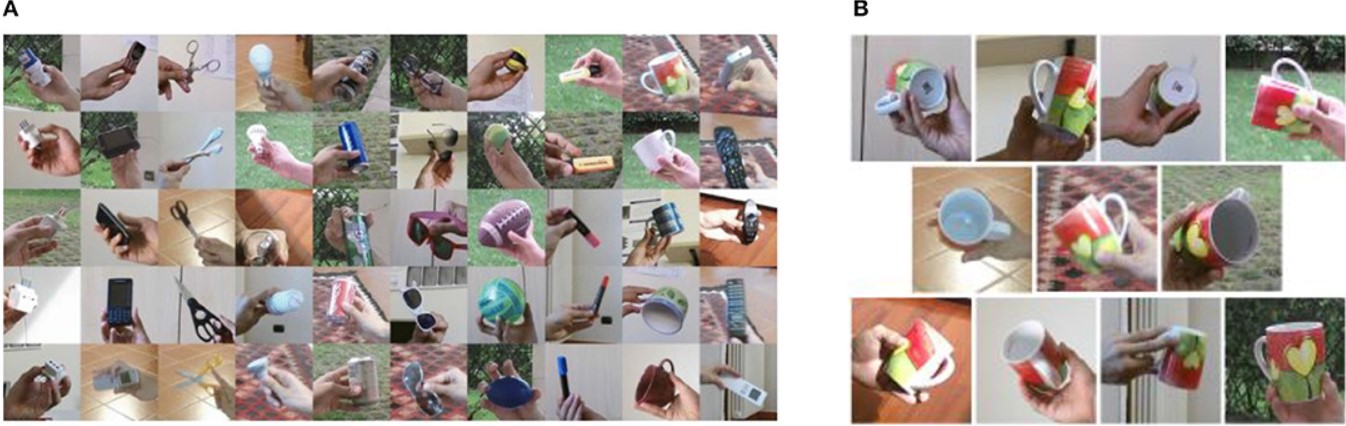

The ability to learn from continuous streams of information is referred to as lifelong learning. It represents a long-standing challenge for neural network models because of catastrophic forgetting, in which novel sensory experience interferes with existing representations and causes abrupt drops in performance on previously acquired knowledge. In the current study, we proposed a dual-memory self-organizing architecture for lifelong learning scenarios. The architecture comprises two growing recurrent networks with the complementary tasks of learning object instances (episodic memory) and categories (semantic memory). Both growing networks can expand in response to novel sensory experience: the episodic memory learns fine-grained spatiotemporal representations of object instances in an unsupervised fashion, while the semantic memory uses task-relevant signals to regulate structural plasticity levels and develop more compact representations from episodic experience. To consolidate knowledge in the absence of external sensory input, the episodic memory periodically replays trajectories of neural reactivations. We evaluated the proposed model on the CORe50 benchmark dataset for continuous object recognition, where it significantly outperformed other existing lifelong learning methods in three different incremental learning scenarios.

Figure 10: The dual-memory self-organizing architecture proposed for modeling of lifelong learning.

Figure 11: The CORe50 benchmark dataset used for evaluation of the proposed model in a continuous object recognition task.
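How a network can "expand in response to novel sensory experience" can be illustrated with a simplified grow-when-required (GWR) style rule, swapped in here for illustration: a node is inserted when the best-matching node responds too weakly to an input, otherwise that node adapts toward the input. This is not the dual-memory architecture itself; edges between nodes, habituation counters, temporal context, label-driven regulation and replay are all omitted, and `activity_threshold` and `learning_rate` are hypothetical values.

```python
import numpy as np


class GrowingNetwork:
    """Simplified GWR-style network: grows on novel inputs, adapts on familiar ones."""

    def __init__(self, first, second, activity_threshold=0.35, learning_rate=0.1):
        # Start from two nodes placed on the first two inputs.
        self.weights = [np.asarray(first, dtype=float), np.asarray(second, dtype=float)]
        self.activity_threshold = activity_threshold  # hypothetical value
        self.learning_rate = learning_rate            # hypothetical value

    def best_match(self, x):
        """Index of the node closest to x, and its Euclidean distance."""
        dists = [np.linalg.norm(x - w) for w in self.weights]
        b = int(np.argmin(dists))
        return b, dists[b]

    def update(self, x):
        """Grow if x is poorly represented, otherwise adapt the best match."""
        x = np.asarray(x, dtype=float)
        b, dist = self.best_match(x)
        activity = float(np.exp(-dist))
        if activity < self.activity_threshold:
            # Novel input: insert a node halfway between the best match and x.
            self.weights.append(0.5 * (self.weights[b] + x))
        else:
            # Familiar input: move the best-matching node toward x.
            self.weights[b] = self.weights[b] + self.learning_rate * (x - self.weights[b])
        return activity


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    familiar = rng.normal(0.0, 0.05, size=(20, 2))  # toy "known" experience
    novel = rng.normal(5.0, 0.05, size=(20, 2))     # toy novel experience
    net = GrowingNetwork(familiar[0], familiar[1])
    for x in familiar:
        net.update(x)
    print("nodes after familiar data:", len(net.weights))
    for x in novel:
        net.update(x)
    print("nodes after novel data:", len(net.weights))
```

In this toy run the network keeps its two initial nodes for the familiar cluster and inserts new nodes only when the distant cluster appears. Raising `activity_threshold` makes insertion more frequent, loosely mirroring the contrast between a finer-grained episodic memory and a more compact semantic memory.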

4. Publications

4.1 Journals

- Parisi, G. I., Tani, J., Weber, C., & Wermter, S. Lifelong Learning of Spatiotemporal Representations with Dual-Memory Recurrent Self-Organization. Frontiers in Neurorobotics, 12:78 (2018).

- Zhong, J., Peniak, M., Tani, J., Ogata, T., & Cangelosi, A. Sensorimotor input as a language generalisation tool: a neurorobotics model for generation and generalisation of noun-verb combinations with sensorimotor inputs. Autonomous Robots, 1-20 (2018).

- Idei, H., Murata, S., Chen, Y., Yamashita, Y., Tani, J., & Ogata, T. A Neurorobotics Simulation of Autistic Behavior Induced by Unusual Sensory Precision. Computational Psychiatry, 2, 164-182 (2018).

- Jung, M., Lee, H., & Tani, J. Adaptive Detrending to Accelerate Convolutional Gated Recurrent Unit Training for Contextual Video Recognition. Neural Networks, 105, 356-370 (2018).

- Hwang, J., Kim, J., Ahmadi, A., Choi, M., & Tani, J. Dealing With Large-Scale Spatio-Temporal Patterns in Imitative Interaction Between a Robot and a Human by Using the Predictive Coding Framework. IEEE Transactions on Systems, Man, and Cybernetics: Systems, (99), 1-14 (2018).

- Choi, M., & Tani, J. Predictive Coding for Dynamic Visual Processing: Development of Functional Hierarchy in a Multiple Spatio-Temporal Scales RNN Model. Neural Computation, 30, 237-270 (2018).

4.2 Books and refereed conference proceedings

- Hwang, J., Wirkuttis, N., & Tani, J. A Neurorobotics Approach to Investigating the Emergence of Communication in Robots. 2019 14th ACM/IEEE International Conference on Human-Robot Interaction (HRI), Daegu, South Korea, March 11-14 (2019) DOI: 10.1109/HRI.2019.8673214.

- Tani, J. Accounting for the Minimal Self and the Narrative Self: Robotics Experiments Using Predictive Coding, TOCAIS19 AAAI Spring Symposium “Towards conscious AI systems”, Stanford, USA, March 25 - 27 (2019).

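- Tani, J. Integrative understanding of consciousness and free will based on brain-inspired robotics research (in Japanese). In Rebooting Bergson's "Matter and Memory": Perspectives on Extended Bergsonism, Shoshi Shinsui (2018).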

- Huang, J., & Tani, J. Visuomotor Associative Learning under the Predictive Coding Framework: a Neuro-robotics Experiment, The 28th Annual Conference of the Japanese Neural Network Society (JNNS2018), Okinawa, Japan, October 24 – 27 (2018).

- Wirkuttis, N., Hwang, J., & Tani, J. Spontaneous Shifts of Social Alignment in Synthetic Robot-Robot Interactions, BODIS 2018: Body, Interaction, Self workshop, IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS 2018), Madrid, Spain, October 1 (2018).

- Huang, J., & Tani, J. A Dynamic Neural Network Approach to Generating Robot’s Novel Actions: A Simulation Experiment, Ubiquitous Robots 2018, Hawaii, USA, June 26 - 30 (2018).

4.3 Invited seminars and talks

- Tani, J. Exploring normal and abnormal development of cognitive constructs by conducting experimental studies in synthetic neurorobotics, IRCN Neuro-inspired Computation Course, Tokyo, Japan, March 21 – 24 (2019).

- Tani, J. Synthetic neurorobotics experiment studies toward understanding mechanisms of social cognition, SoAIR2019 JST-CREST/IEEE-RAS Spring School on “Social and Artificial Intelligence for User-Friendly Robots”, Shonan, Japan, March 17 - 24 (2019).

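- Tani, J. On the bodily self and the narrative self, considered through a synthetic robotics approach, The 1st Public Symposium “Adventures around the Self: Beyond the Boundaries of Phenomenology, Robotics, Neuroscience, and Psychiatry”, Tokyo, Japan, February 20 - 21 (2019).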

- Tani, J. Synthetic neurorobotics experiment studies toward understanding mechanisms of social cognition, MEXT Grant-in-Aid for Scientific Research on Innovative Areas: Evolinguistics and OIST Joint Workshop, Okinawa, Japan, February 18 – 19 (2019).

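- Tani, J. Exploring robotic minds: actions, symbols, and consciousness as self-organizing dynamic phenomena, FY2018 10th Master's and Doctoral Seminar of the Association of Korean Scientists and Engineers in Japan, “Robotics & AI (in the Perspective of the Fourth Industrial Revolution)”, Tokyo, Japan, October 13 (2018).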

- Tani, J. Exploring Robotic Minds by Using the Predictive Coding Framework, Seminar at Humboldt University, Berlin, Germany, September 26 (2018).

- Tani, J. Mind wandering of robots for future and past by predictive coding-type recurrent neural network models, Bernstein Conference 2018 Satellite Workshops, Berlin, Germany, September 25 - 28 (2018).

- Tani, J. Emergentist Account for Non-Reductive Consciousness: From Neurorobotics Study, Workshop for the Synthetic Approach to Biology and the Cognitive Sciences, ALIFE2018, Tokyo, Japan, July 23 - 27 (2018).

- Tani, J. Exploring Robotic Minds by Using the Predictive Coding Principle, Eighth International Symposium on Biology of Decision Making, Satellite workshop, Paris, France, May 24 (2018).

5. Intellectual Property Rights and Other Specific Achievements

Nothing to report

6. Meetings and Events

6.1 Seminars

End to end approach for behavior generation in robot systems

- Date: May 7, 2018

- Venue: OIST Campus Lab 1, C016

- Speaker: Prof. Tetsuya Ogata (Waseda University)

Performance disruption and predictive processing in expert sensorimotor skills

- Date: June 7, 2018

- Venue: OIST Campus Lab 1, C015

- Speaker: Dr. Massimiliano L. Cappuccio (Cognitive Science, UAE University)

Neurorobotics in the context of The Human Brain Project

- Date: September 10, 2018

- Venue: OIST Campus Lab 1, C016

- Speaker: Dr. Florian Röhrbein (Alfred Kärcher GmbH & Co. KG / Technical University of Munich)

Body perception under the free energy formulation for robots and humans

- Date: September 21, 2018

- Venue: OIST Campus Lab 1, D014

- Speaker: Dr. Pablo Lanillos (Technical University of Munich)

Developmental Robotics for Language Learning, Trust and Theory of Mind

- Date: September 21, 2018

- Venue: OIST Campus Lab 1, D014

- Speaker: Prof. Angelo Cangelosi (University of Manchester and University of Plymouth)

Neuromorphic Dynamics towards Symbiotic Society - an overview, neural computation, artificial pain, and beyond -

- Date: February 19, 2019

- Venue: OIST Campus Lab 1, C015

- Speaker: Prof. Minoru Asada (Osaka University)

Why are babies better at learning a language than grown-ups?

- Date: February 20, 2019

- Venue: OIST Campus Lab 1, C016

- Speaker: Dr. Reiko Mazuka (RIKEN Center for Brain Science)

Skill and choice – implication from stroke rehabilitation

- Date: February 22, 2019

- Venue: OIST Campus Center Bldg, C209

- Speaker: Prof. Rieko Osu (Waseda University)

Tensor Decomposition and Tensor Networks and their applications, especially in Brain Computer Interface and recognition of Human Emotions

- Date: March 4, 2019

- Venue: OIST Campus Lab 1, C016

- Speaker: Prof. Andrzej Cichocki (Skolkovo Institute of Science and Technology)

6.2 Events

The 28th Annual Conference of the Japanese Neural Network Society (JNNS2018)

- Date: October 24 - 27, 2018

- Venue: OIST Main Campus

- Co-organizers:

- Kenji Doya, OIST

- Ryuta Miyata, University of the Ryukyus

- Akira Takashi Sato, NIT, Okinawa College

- Akira Masumi, NIT, Okinawa College

- Kazuki Miyazato, NIT, Okinawa College

- Naoto Tome, NIT, Okinawa College

- Hiromichi Tsukada, OIST

7. Other

Nothing to report.